mirror of

https://github.com/AbdBarho/stable-diffusion-webui-docker.git

synced 2025-12-14 22:35:10 -05:00

Compare commits

13 Commits

8.2.0

...

7a6f5e1374

| Author | SHA1 | Date |
|---|---|---|
| | 7a6f5e1374 | |
| | 0f3187505b | |
| | 25d8d0c008 | |
| | 6a0366cf45 | |
| | 802d0bcd68 | |
| | b1a26b8041 | |
| | f1bf3b0943 | |
| | 35a18b3d46 | |
| | 887e49c495 | |
| | 7051ce0a44 | |
| | ac94eac2b5 | |
| | 015c2ec829 | |
| | 245d1d443f | |

1

.github/workflows/docker.yml

vendored


@@ -14,7 +14,6 @@ jobs:

|

||||

matrix:

|

||||

profile:

|

||||

- auto

|

||||

- invoke

|

||||

- comfy

|

||||

- download

|

||||

runs-on: ubuntu-latest

|

||||

|

||||

@@ -18,14 +18,6 @@ This repository provides multiple UIs for you to play around with stable diffusi

|

||||

| ---------------------------------------------------------------------------------------------------------- | ---------------------------------------------------------------------------------------------------------- | ---------------------------------------------------------------------------------------------------------- |

|

||||

|  |  |  |

|

||||

|

||||

### [InvokeAI](https://github.com/invoke-ai/InvokeAI)

|

||||

|

||||

[Full feature list here](https://github.com/invoke-ai/InvokeAI#features), Screenshots:

|

||||

|

||||

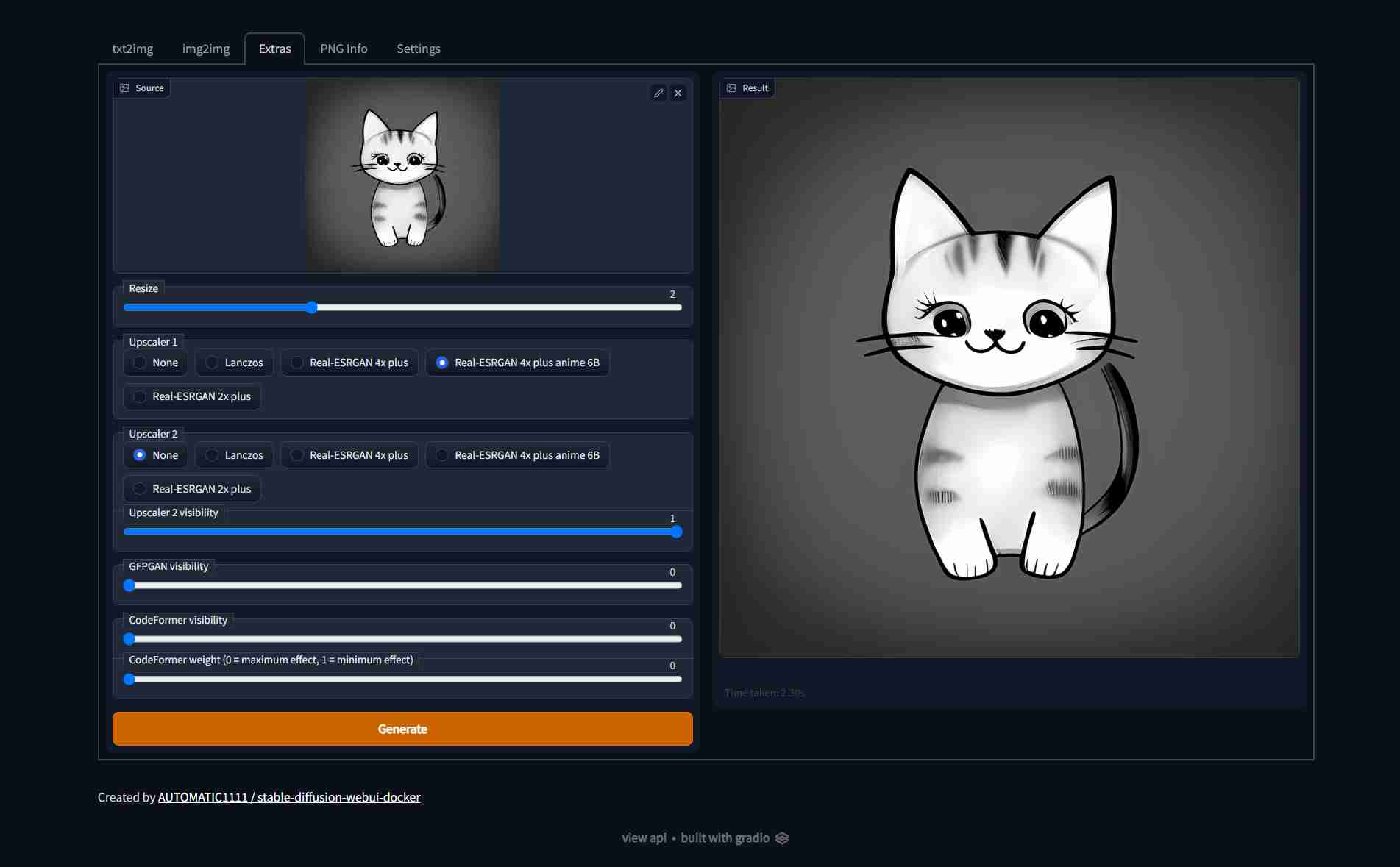

| Text to image | Image to image | Extras |

|

||||

| ---------------------------------------------------------------------------------------------------------- | ---------------------------------------------------------------------------------------------------------- | ---------------------------------------------------------------------------------------------------------- |

|

||||

|  |  |  |

|

||||

|

||||

### [ComfyUI](https://github.com/comfyanonymous/ComfyUI)

|

||||

|

||||

[Full feature list here](https://github.com/comfyanonymous/ComfyUI#features), Screenshot:

|

||||

|

||||

@@ -1,5 +1,3 @@

|

||||

version: '3.9'

|

||||

|

||||

x-base_service: &base_service

|

||||

ports:

|

||||

- "${WEBUI_PORT:-7860}:7860"

|

||||

@@ -29,7 +27,7 @@ services:

|

||||

<<: *base_service

|

||||

profiles: ["auto"]

|

||||

build: ./services/AUTOMATIC1111

|

||||

image: sd-auto:71

|

||||

image: sd-auto:78

|

||||

environment:

|

||||

- CLI_ARGS=--allow-code --medvram --xformers --enable-insecure-extension-access --api

|

||||

|

||||

@@ -40,27 +38,11 @@ services:

|

||||

environment:

|

||||

- CLI_ARGS=--no-half --precision full --allow-code --enable-insecure-extension-access --api

|

||||

|

||||

invoke: &invoke

|

||||

<<: *base_service

|

||||

profiles: ["invoke"]

|

||||

build: ./services/invoke/

|

||||

image: sd-invoke:30

|

||||

environment:

|

||||

- PRELOAD=true

|

||||

- CLI_ARGS=--xformers

|

||||

|

||||

# invoke-cpu:

|

||||

# <<: *invoke

|

||||

# profiles: ["invoke-cpu"]

|

||||

# environment:

|

||||

# - PRELOAD=true

|

||||

# - CLI_ARGS=--always_use_cpu

|

||||

|

||||

comfy: &comfy

|

||||

<<: *base_service

|

||||

profiles: ["comfy"]

|

||||

build: ./services/comfy/

|

||||

image: sd-comfy:6

|

||||

image: sd-comfy:7

|

||||

environment:

|

||||

- CLI_ARGS=

|

||||

|

||||

|

||||

@@ -2,20 +2,19 @@ FROM alpine/git:2.36.2 as download

|

||||

|

||||

COPY clone.sh /clone.sh

|

||||

|

||||

RUN . /clone.sh stable-diffusion-webui-assets https://github.com/AUTOMATIC1111/stable-diffusion-webui-assets.git 6f7db241d2f8ba7457bac5ca9753331f0c266917

|

||||

|

||||

RUN . /clone.sh stable-diffusion-stability-ai https://github.com/Stability-AI/stablediffusion.git cf1d67a6fd5ea1aa600c4df58e5b47da45f6bdbf \

|

||||

&& rm -rf assets data/**/*.png data/**/*.jpg data/**/*.gif

|

||||

|

||||

RUN . /clone.sh CodeFormer https://github.com/sczhou/CodeFormer.git c5b4593074ba6214284d6acd5f1719b6c5d739af \

|

||||

&& rm -rf assets inputs

|

||||

|

||||

RUN . /clone.sh BLIP https://github.com/salesforce/BLIP.git 48211a1594f1321b00f14c9f7a5b4813144b2fb9

|

||||

RUN . /clone.sh k-diffusion https://github.com/crowsonkb/k-diffusion.git ab527a9a6d347f364e3d185ba6d714e22d80cb3c

|

||||

RUN . /clone.sh clip-interrogator https://github.com/pharmapsychotic/clip-interrogator 2cf03aaf6e704197fd0dae7c7f96aa59cf1b11c9

|

||||

RUN . /clone.sh generative-models https://github.com/Stability-AI/generative-models 45c443b316737a4ab6e40413d7794a7f5657c19f

|

||||

RUN . /clone.sh stable-diffusion-webui-assets https://github.com/AUTOMATIC1111/stable-diffusion-webui-assets 6f7db241d2f8ba7457bac5ca9753331f0c266917

|

||||

|

||||

|

||||

FROM pytorch/pytorch:2.1.2-cuda12.1-cudnn8-runtime

|

||||

FROM pytorch/pytorch:2.3.0-cuda12.1-cudnn8-runtime

|

||||

|

||||

ENV DEBIAN_FRONTEND=noninteractive PIP_PREFER_BINARY=1

|

||||

|

||||

@@ -31,7 +30,7 @@ WORKDIR /

|

||||

RUN --mount=type=cache,target=/root/.cache/pip \

|

||||

git clone https://github.com/AUTOMATIC1111/stable-diffusion-webui.git && \

|

||||

cd stable-diffusion-webui && \

|

||||

git reset --hard cf2772fab0af5573da775e7437e6acdca424f26e && \

|

||||

git reset --hard v1.9.4 && \

|

||||

pip install -r requirements_versions.txt

|

||||

|

||||

|

||||

@@ -39,16 +38,14 @@ ENV ROOT=/stable-diffusion-webui

|

||||

|

||||

COPY --from=download /repositories/ ${ROOT}/repositories/

|

||||

RUN mkdir ${ROOT}/interrogate && cp ${ROOT}/repositories/clip-interrogator/clip_interrogator/data/* ${ROOT}/interrogate

|

||||

RUN --mount=type=cache,target=/root/.cache/pip \

|

||||

pip install -r ${ROOT}/repositories/CodeFormer/requirements.txt

|

||||

|

||||

RUN --mount=type=cache,target=/root/.cache/pip \

|

||||

pip install pyngrok xformers \

|

||||

pip install pyngrok xformers==0.0.26.post1 \

|

||||

git+https://github.com/TencentARC/GFPGAN.git@8d2447a2d918f8eba5a4a01463fd48e45126a379 \

|

||||

git+https://github.com/openai/CLIP.git@d50d76daa670286dd6cacf3bcd80b5e4823fc8e1 \

|

||||

git+https://github.com/mlfoundations/open_clip.git@v2.20.0

|

||||

|

||||

# there seems to be a memory leak (or maybe just memory not being freed fast eno8ugh) that is fixed by this version of malloc

|

||||

# there seems to be a memory leak (or maybe just memory not being freed fast enough) that is fixed by this version of malloc

|

||||

# maybe move this up to the dependencies list.

|

||||

RUN apt-get -y install libgoogle-perftools-dev && apt-get clean

|

||||

ENV LD_PRELOAD=libtcmalloc.so

|

||||

|

||||

@@ -1,19 +1,92 @@

|

||||

FROM pytorch/pytorch:2.1.2-cuda12.1-cudnn8-runtime

|

||||

FROM pytorch/pytorch:2.3.1-cuda12.1-cudnn8-runtime

|

||||

|

||||

ENV DEBIAN_FRONTEND=noninteractive PIP_PREFER_BINARY=1

|

||||

# Limited system user UID

|

||||

ARG USE_UID=991

|

||||

# Limited system user GID

|

||||

ARG USE_GID=991

|

||||

# Latest tag or bleeding edge commit

|

||||

ARG USE_EDGE=false

|

||||

# ComfyUI-GGUF

|

||||

ARG USE_GGUF=false

|

||||

# x-flux-comfyui

|

||||

ARG USE_XFLUX=false

|

||||

# comfyui_controlnet_aux

|

||||

ARG USE_CNAUX=false

|

||||

# krita-ai-diffusion

|

||||

ARG USE_KRITA=false

|

||||

# ComfyUI_IPAdapter_plus

|

||||

ARG USE_IPAPLUS=false

|

||||

# comfyui-inpaint-nodes

|

||||

ARG USE_INPAINT=false

|

||||

# comfyui-tooling-nodes

|

||||

ARG USE_TOOLING=false

|

||||

|

||||

RUN apt-get update && apt-get install -y git && apt-get clean

|

||||

ENV DEBIAN_FRONTEND=noninteractive PIP_PREFER_BINARY=1 USE_EDGE=$USE_EDGE

|

||||

ENV USE_GGUF=$USE_GGUF USE_XFLUX=$USE_XFLUX ROOT=/stable-diffusion

|

||||

ENV CACHE=/home/app/.cache USE_CNAUX=$USE_CNAUX USE_KRITA=$USE_KRITA

|

||||

ENV USE_IPAPLUS=$USE_IPAPLUS USE_INPAINT=$USE_INPAINT USE_TOOLING=$USE_TOOLING

|

||||

|

||||

ENV ROOT=/stable-diffusion

|

||||

RUN --mount=type=cache,target=/root/.cache/pip \

|

||||

# User/Group

|

||||

RUN groupadd -r app -g ${USE_GID} && useradd --no-log-init -m -r -g app app -u ${USE_UID} && \

|

||||

mkdir -p ${ROOT} && chown ${USE_UID}:${USE_GID} ${ROOT} && mkdir -p ${CACHE}/pip && chown -R ${USE_UID}:${USE_GID} ${CACHE}

|

||||

RUN --mount=type=cache,uid=${USE_UID},gid=${USE_GID},target=${CACHE} chown -R ${USE_UID}:${USE_GID} ${CACHE}

|

||||

|

||||

RUN apt-get update && apt-get install -y git && if [ "${USE_XFLUX}" = "true" ]; then apt-get install -y libgl1-mesa-glx python3-opencv; fi && apt-get clean

|

||||

|

||||

USER app:app

|

||||

ENV PATH="${PATH}:/home/app/.local/bin"

|

||||

|

||||

RUN --mount=type=cache,uid=${USE_UID},gid=${USE_GID},target=${CACHE} pip --cache-dir=${CACHE}/pip install -U pip

|

||||

|

||||

RUN --mount=type=cache,uid=${USE_UID},gid=${USE_GID},target=${CACHE} \

|

||||

git clone https://github.com/comfyanonymous/ComfyUI.git ${ROOT} && \

|

||||

cd ${ROOT} && \

|

||||

git checkout master && \

|

||||

git reset --hard d1f3637a5a944d0607b899babd8ff11d87100503 && \

|

||||

pip install -r requirements.txt

|

||||

bash -c 'VERSION=$(git describe --tags --abbrev=0) && \

|

||||

if [ "${USE_EDGE}" = "true" ]; then VERSION=$(git describe --abbrev=7); fi && \

|

||||

git reset --hard ${VERSION}' && \

|

||||

pip --cache-dir=${CACHE}/pip install -r requirements.txt && \

|

||||

if [ "${USE_KRITA}" = "true" ]; then \

|

||||

pip --cache-dir=${CACHE}/pip install aiohttp tqdm && \

|

||||

git clone https://github.com/Acly/krita-ai-diffusion.git && \

|

||||

cd krita-ai-diffusion && git checkout main && \

|

||||

git submodule update --init && cd ..; \

|

||||

export USE_CNAUX="true" USE_IPAPLUS="true" \

|

||||

USE_INPAINT="true" USE_TOOLING="true"; \

|

||||

fi; \

|

||||

if [ "${USE_GGUF}" = "true" ]; then \

|

||||

git clone https://github.com/city96/ComfyUI-GGUF.git && \

|

||||

cd ComfyUI-GGUF && git checkout main && \

|

||||

pip --cache-dir=${CACHE}/pip install -r requirements.txt && cd ..; \

|

||||

fi; \

|

||||

if [ "${USE_XFLUX}" = "true" ]; then \

|

||||

git clone https://github.com/XLabs-AI/x-flux-comfyui.git && \

|

||||

cd x-flux-comfyui && git checkout main && \

|

||||

pip --cache-dir=${CACHE}/pip install -r requirements.txt && cd ..; \

|

||||

fi; \

|

||||

if [ "${USE_CNAUX}" = "true" ]; then \

|

||||

git clone https://github.com/Fannovel16/comfyui_controlnet_aux.git && \

|

||||

cd comfyui_controlnet_aux && git checkout main && \

|

||||

pip --cache-dir=${CACHE}/pip install -r requirements.txt && \

|

||||

# This extra step to separate onnxruntime installation is required to restore onnx cuda support \

|

||||

pip --cache-dir=${CACHE}/pip install onnxruntime && pip --cache-dir=${CACHE}/pip install onnxruntime-gpu && cd ..; \

|

||||

fi; \

|

||||

if [ "${USE_IPAPLUS}" = "true" ]; then \

|

||||

git clone https://github.com/cubiq/ComfyUI_IPAdapter_plus.git && \

|

||||

cd ComfyUI_IPAdapter_plus && git checkout main && cd ..; \

|

||||

fi; \

|

||||

if [ "${USE_INPAINT}" = "true" ]; then \

|

||||

git clone https://github.com/Acly/comfyui-inpaint-nodes.git && \

|

||||

cd comfyui-inpaint-nodes && git checkout main && \

|

||||

pip --cache-dir=${CACHE}/pip install opencv-python && cd ..; \

|

||||

fi; \

|

||||

if [ "${USE_TOOLING}" = "true" ]; then \

|

||||

git clone https://github.com/Acly/comfyui-tooling-nodes.git && \

|

||||

cd comfyui-tooling-nodes && git checkout main && cd ..; \

|

||||

fi

|

||||

|

||||

WORKDIR ${ROOT}

|

||||

COPY . /docker/

|

||||

COPY --chown=${USE_UID}:${USE_GID} . /docker/

|

||||

RUN chmod u+x /docker/entrypoint.sh && cp /docker/extra_model_paths.yaml ${ROOT}

|

||||

|

||||

ENV NVIDIA_VISIBLE_DEVICES=all PYTHONPATH="${PYTHONPATH}:${PWD}" CLI_ARGS=""

|

||||

|

||||

@@ -2,11 +2,12 @@

|

||||

|

||||

set -Eeuo pipefail

|

||||

|

||||

mkdir -vp /data/config/comfy/custom_nodes

|

||||

CUSTOM_NODES="/data/config/comfy/custom_nodes"

|

||||

mkdir -vp "${CUSTOM_NODES}"

|

||||

|

||||

declare -A MOUNTS

|

||||

|

||||

MOUNTS["/root/.cache"]="/data/.cache"

|

||||

MOUNTS["${CACHE}"]="/data/.cache"

|

||||

MOUNTS["${ROOT}/input"]="/data/config/comfy/input"

|

||||

MOUNTS["${ROOT}/output"]="/output/comfy"

|

||||

|

||||

@@ -22,6 +23,43 @@ for to_path in "${!MOUNTS[@]}"; do

|

||||

echo Mounted $(basename "${from_path}")

|

||||

done

|

||||

|

||||

|

||||

if [ "${USE_KRITA}" = "true" ]; then

|

||||

if [ "${KRITA_DOWNLOAD_MODELS:-false}" = "true" ]; then

|

||||

cd "${ROOT}/krita-ai-diffusion" && python scripts/download_models.py --recommended /data && cd ..

|

||||

cd "${ROOT}/models/" && mv -v upscale_models upscale_models.stock && ln -sT /data/models/upscale_models upscale_models

|

||||

fi

|

||||

export USE_CNAUX="true" USE_IPAPLUS="true" USE_INPAINT="true" USE_TOOLING="true"

|

||||

fi

|

||||

if [ "${USE_GGUF}" = "true" ]; then

|

||||

[ ! -e "${CUSTOM_NODES}/ComfyUI-GGUF" ] && mv "${ROOT}/ComfyUI-GGUF" "${CUSTOM_NODES}"/

|

||||

fi

|

||||

if [ "${USE_XFLUX}" = "true" ]; then

|

||||

[ ! -e "${CUSTOM_NODES}/x-flux-comfyui" ] && mv "${ROOT}/x-flux-comfyui" "${CUSTOM_NODES}"/

|

||||

[ ! -e "/data/models/clip_vision" ] && mkdir -p /data/models/clip_vision

|

||||

[ ! -e "/data/models/clip_vision/model.safetensors" ] && cd /data/models/clip_vision && \

|

||||

python -c 'import sys; from urllib.request import urlopen; from pathlib import Path; Path(sys.argv[2]).write_bytes(urlopen("".join([sys.argv[1],sys.argv[2]])).read())' \

|

||||

"https://huggingface.co/openai/clip-vit-large-patch14/resolve/main/" "model.safetensors"

|

||||

[ ! -e "/data/models/xlabs" ] && mkdir -p /data/models/xlabs/{ipadapters,loras,controlnets}

|

||||

[ ! -e "/data/models/xlabs/ipadapters/flux-ip-adapter.safetensors" ] && cd /data/models/xlabs/ipadapters && \

|

||||

python -c 'import sys; from urllib.request import urlopen; from pathlib import Path; Path(sys.argv[2]).write_bytes(urlopen("".join([sys.argv[1],sys.argv[2]])).read())' \

|

||||

"https://huggingface.co/XLabs-AI/flux-ip-adapter/resolve/main/" "flux-ip-adapter.safetensors"

|

||||

[ -d "${ROOT}/models/xlabs" ] && rm -rf "${ROOT}/models/xlabs"

|

||||

[ ! -e "${ROOT}/models/xlabs" ] && cd "${ROOT}/models" && ln -sT /data/models/xlabs xlabs && cd ..

|

||||

fi

|

||||

if [ "${USE_CNAUX}" = "true" ]; then

|

||||

[ ! -e "${CUSTOM_NODES}/comfyui_controlnet_aux" ] && mv "${ROOT}/comfyui_controlnet_aux" "${CUSTOM_NODES}"/

|

||||

fi

|

||||

if [ "${USE_IPAPLUS}" = "true" ]; then

|

||||

[ ! -e "${CUSTOM_NODES}/ComfyUI_IPAdapter_plus" ] && mv "${ROOT}/ComfyUI_IPAdapter_plus" "${CUSTOM_NODES}"/

|

||||

fi

|

||||

if [ "${USE_INPAINT}" = "true" ]; then

|

||||

[ ! -e "${CUSTOM_NODES}/comfyui-inpaint-nodes" ] && mv "${ROOT}/comfyui-inpaint-nodes" "${CUSTOM_NODES}"/

|

||||

fi

|

||||

if [ "${USE_TOOLING}" = "true" ]; then

|

||||

[ ! -e "${CUSTOM_NODES}/comfyui-tooling-nodes" ] && mv "${ROOT}/comfyui-tooling-nodes" "${CUSTOM_NODES}"/

|

||||

fi

|

||||

|

||||

if [ -f "/data/config/comfy/startup.sh" ]; then

|

||||

pushd ${ROOT}

|

||||

. /data/config/comfy/startup.sh
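The `USE_*` blocks in this entrypoint hunk all follow the same pattern: a custom node that was cloned into `${ROOT}` at build time is moved into the persistent `custom_nodes` directory only if it is not already there. A minimal sketch of that pattern, runnable in a throwaway directory. The `install_node` helper and the sandbox paths are hypothetical and not part of the repository, and the extra check that the source directory exists is an addition made for the sketch:

```shell
#!/usr/bin/env bash
set -Eeuo pipefail

# Hypothetical sandbox standing in for the container filesystem.
SANDBOX="$(mktemp -d)"
ROOT="${SANDBOX}/stable-diffusion"
CUSTOM_NODES="${SANDBOX}/data/config/comfy/custom_nodes"

# Simulate a node cloned at build time and an empty persistent volume.
mkdir -p "${ROOT}/ComfyUI-GGUF" "${CUSTOM_NODES}"

# install_node is a hypothetical helper: move a pre-cloned node into the
# persistent directory unless a copy is already installed there.
install_node() {
  local name="$1"
  if [ ! -e "${CUSTOM_NODES}/${name}" ] && [ -e "${ROOT}/${name}" ]; then
    mv "${ROOT}/${name}" "${CUSTOM_NODES}/"
    echo "installed ${name}"
  else
    echo "skipped ${name}"
  fi
}

USE_GGUF="true"
if [ "${USE_GGUF}" = "true" ]; then
  install_node ComfyUI-GGUF   # first call moves the directory
  install_node ComfyUI-GGUF   # second call finds it installed and skips
fi
```

Because the check looks at the persistent directory first, restarting the container leaves an already installed (and possibly user-updated) node untouched.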

|

||||

|

||||

@@ -15,11 +15,15 @@ a111:

|

||||

gligen: models/GLIGEN

|

||||

clip: models/CLIPEncoder

|

||||

embeddings: embeddings

|

||||

unet: models/unet

|

||||

clip_vision: models/clip_vision

|

||||

xlabs: models/xlabs

|

||||

inpaint: models/inpaint

|

||||

ipadapter: models/ipadapter

|

||||

|

||||

custom_nodes: config/comfy/custom_nodes

|

||||

|

||||

# TODO: I am unsure about these, need more testing

|

||||

# style_models: config/comfy/style_models

|

||||

# t2i_adapter: config/comfy/t2i_adapter

|

||||

# clip_vision: config/comfy/clip_vision

|

||||

# diffusers: config/comfy/diffusers

|

||||

|

||||

@@ -1,6 +1,6 @@

|

||||

FROM bash:alpine3.15

|

||||

FROM bash:alpine3.19

|

||||

|

||||

RUN apk add parallel aria2

|

||||

RUN apk update && apk add parallel aria2

|

||||

COPY . /docker

|

||||

RUN chmod +x /docker/download.sh

|

||||

ENTRYPOINT ["/docker/download.sh"]

|

||||

|

||||

@@ -1,53 +0,0 @@

|

||||

FROM alpine:3.17 as xformers

|

||||

RUN apk add --no-cache aria2

|

||||

RUN aria2c -x 5 --dir / --out wheel.whl 'https://github.com/AbdBarho/stable-diffusion-webui-docker/releases/download/6.0.0/xformers-0.0.21.dev544-cp310-cp310-manylinux2014_x86_64-pytorch201.whl'

|

||||

|

||||

|

||||

FROM pytorch/pytorch:2.0.1-cuda11.7-cudnn8-runtime

|

||||

|

||||

ENV DEBIAN_FRONTEND=noninteractive PIP_EXISTS_ACTION=w PIP_PREFER_BINARY=1

|

||||

|

||||

# patch match:

|

||||

# https://github.com/invoke-ai/InvokeAI/blob/main/docs/installation/INSTALL_PATCHMATCH.md

|

||||

RUN --mount=type=cache,target=/var/cache/apt \

|

||||

apt-get update && \

|

||||

apt-get install make g++ git libopencv-dev -y && \

|

||||

apt-get clean && \

|

||||

cd /usr/lib/x86_64-linux-gnu/pkgconfig/ && \

|

||||

ln -sf opencv4.pc opencv.pc

|

||||

|

||||

|

||||

ENV ROOT=/InvokeAI

|

||||

RUN git clone https://github.com/invoke-ai/InvokeAI.git ${ROOT}

|

||||

WORKDIR ${ROOT}

|

||||

|

||||

RUN --mount=type=cache,target=/root/.cache/pip \

|

||||

git reset --hard f3b2e02921927d9317255b1c3811f47bd40a2bf9 && \

|

||||

pip install -e .

|

||||

|

||||

|

||||

ARG BRANCH=main SHA=f3b2e02921927d9317255b1c3811f47bd40a2bf9

|

||||

RUN --mount=type=cache,target=/root/.cache/pip \

|

||||

git fetch && \

|

||||

git reset --hard && \

|

||||

git checkout ${BRANCH} && \

|

||||

git reset --hard ${SHA} && \

|

||||

pip install -U -e .

|

||||

|

||||

RUN --mount=type=cache,target=/root/.cache/pip \

|

||||

--mount=type=bind,from=xformers,source=/wheel.whl,target=/xformers-0.0.21-cp310-cp310-linux_x86_64.whl \

|

||||

pip install -U opencv-python-headless triton /xformers-0.0.21-cp310-cp310-linux_x86_64.whl && \

|

||||

python3 -c "from patchmatch import patch_match"

|

||||

|

||||

|

||||

COPY . /docker/

|

||||

|

||||

ENV NVIDIA_VISIBLE_DEVICES=all

|

||||

ENV PYTHONUNBUFFERED=1 PRELOAD=false HF_HOME=/root/.cache/huggingface CONFIG_DIR=/data/config/invoke CLI_ARGS=""

|

||||

EXPOSE 7860

|

||||

|

||||

ENTRYPOINT ["/docker/entrypoint.sh"]

|

||||

CMD invokeai --web --host 0.0.0.0 --port 7860 --root_dir ${ROOT} --config ${CONFIG_DIR}/models.yaml \

|

||||

--outdir /output/invoke --embedding_directory /data/embeddings/ --lora_directory /data/models/Lora \

|

||||

--no-nsfw_checker --no-safety_checker ${CLI_ARGS}

|

||||

|

||||

@@ -1,45 +0,0 @@

|

||||

#!/bin/bash

|

||||

|

||||

set -Eeuo pipefail

|

||||

|

||||

declare -A MOUNTS

|

||||

|

||||

mkdir -p ${CONFIG_DIR} ${ROOT}/configs/stable-diffusion/

|

||||

|

||||

# cache

|

||||

MOUNTS["/root/.cache"]=/data/.cache/

|

||||

|

||||

# this is really just a hack to avoid migrations

|

||||

rm -rf ${HF_HOME}/diffusers

|

||||

|

||||

# ui specific

|

||||

MOUNTS["${ROOT}/models/codeformer"]=/data/models/Codeformer/

|

||||

MOUNTS["${ROOT}/models/gfpgan/GFPGANv1.4.pth"]=/data/models/GFPGAN/GFPGANv1.4.pth

|

||||

MOUNTS["${ROOT}/models/gfpgan/weights"]=/data/models/GFPGAN/

|

||||

MOUNTS["${ROOT}/models/realesrgan"]=/data/models/RealESRGAN/

|

||||

|

||||

MOUNTS["${ROOT}/models/ldm"]=/data/.cache/invoke/ldm/

|

||||

|

||||

# hacks

|

||||

|

||||

for to_path in "${!MOUNTS[@]}"; do

|

||||

set -Eeuo pipefail

|

||||

from_path="${MOUNTS[${to_path}]}"

|

||||

rm -rf "${to_path}"

|

||||

mkdir -p "$(dirname "${to_path}")"

|

||||

# ends with slash, make it!

|

||||

if [[ "$from_path" == */ ]]; then

|

||||

mkdir -vp "$from_path"

|

||||

fi

|

||||

|

||||

ln -sT "${from_path}" "${to_path}"

|

||||

echo Mounted $(basename "${from_path}")

|

||||

done

|

||||

|

||||

if "${PRELOAD}" == "true"; then

|

||||

set -Eeuo pipefail

|

||||

invokeai-configure --root ${ROOT} --yes

|

||||

cp ${ROOT}/configs/models.yaml ${CONFIG_DIR}/models.yaml

|

||||

fi

|

||||

|

||||

exec "$@"
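The `MOUNTS` loop in this entrypoint replaces each application path with a symlink to a persistent location, creating the target first when its path ends in a slash. A minimal sketch of that loop, runnable outside the container. The sandbox directories are assumptions made for illustration, and `ln -T` assumes GNU coreutils or BusyBox:

```shell
#!/usr/bin/env bash
set -Eeuo pipefail

# Hypothetical sandbox standing in for the container's ${ROOT} and /data.
SANDBOX="$(mktemp -d)"
ROOT="${SANDBOX}/app"
DATA="${SANDBOX}/data"

# key = path the application reads, value = persistent location behind it
declare -A MOUNTS
MOUNTS["${ROOT}/models/realesrgan"]="${DATA}/models/RealESRGAN/"
MOUNTS["${ROOT}/cache"]="${DATA}/.cache/"

for to_path in "${!MOUNTS[@]}"; do
  from_path="${MOUNTS[${to_path}]}"
  # Drop whatever the image shipped at this path, then recreate its parent.
  rm -rf "${to_path}"
  mkdir -p "$(dirname "${to_path}")"
  # A trailing slash marks a directory that is created when missing.
  if [[ "${from_path}" == */ ]]; then
    mkdir -p "${from_path}"
  fi
  # -T treats the link name as a plain file, never as a directory to link into.
  ln -sT "${from_path}" "${to_path}"
  echo "Mounted $(basename "${from_path}")"
done
```

Keeping the mapping in an associative array means a new persistent path is a single added line rather than another copy of the `rm`/`mkdir`/`ln` sequence.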

|

||||
