mirror of

https://github.com/AbdBarho/stable-diffusion-webui-docker.git

synced 2025-10-27 08:14:26 -04:00

Compare commits

113 Commits

| Author | SHA1 | Date | |
|---|---|---|---|
| | b5cdf299ca | | |
| | db831ece65 | | |
| | ceeac61cb0 | | |
| | 19972f3cac | | |
| | 0c16c105bc | | |
| | 78c90e5435 | | |
| | 6a3826c80a | | |
| | 56d9763a73 | | |
| | 41e0dcc2f6 | | |
| | 5a9d305e5c | | |
| | d70e96da71 | | |
| | d97d257fd3 | | |
| | c3cf8129a9 | | |
| | b8256cccd2 | | |
| | 4969906ec8 | | |
| | 8201e361fa | | |
| | 1423b274b1 | | |
| | 87a51e9fd1 | | |
| | bdee804112 | | |
| | f1a1641add | | |
| | 8df9d10a58 | | |
| | 7a1e52bc7a | | |
| | d20b8732b3 | | |
| | 23757d2356 | | |
| | 9e7979b756 | | |
| | 8623c73741 | | |
| | 9b6750b2f6 | | |
| | 5e3f20ba43 | | |
| | 53ac3601d7 | | |
| | 37feff58bb | | |
| | 427320475b | | |
| | 9a60522244 | | |
| | 887a16ef35 | | |
| | 0a4c2a34b8 | | |
| | 73cd69075e | | |
| | b33c0d4bcf | | |
| | 5450583be1 | | |
| | 1cfb915d12 | | |
| | fb9d1e579c | | |
| | 9092aa233b | | |
| | a5218b8639 | | |
| | d6cbafdca8 | | |
| | 4464e9d9e9 | | |
| | fb5407a6bc | | |
| | 5b4acd605d | | |
| | 48f8650fd8 | | |
| | 31c21025ea | | |
| | 1211e9c5de | | |
| | 49ad173e95 | | |
| | 5122f83c0f | | |
| | 3c544dd7f4 | | |
| | 42cc17da74 | | |
| | 31e4dec08f | | |
| | 0148e5e109 | | |
| | 111825ac25 | | |
| | c1e13867d9 | | |
| | 463f332d14 | | |
| | 3682303355 | | |
| | 402c691a49 | | |
| | b36113b7d8 | | |
| | b60c787474 | | |
| | 161fd52c16 | | |
| | 3b3c244c31 | | |
| | 5698c49653 | | |
| | 710280c7ab | | |
| | e1e03229fd | | |
| | 79868d88e8 | | |
| | 6f5eef42a7 | | |
| | 14c4b36aff | | |
| | 28f171e64d | | |
| | 9af4a23ec4 | | |
| | 24ecd676ab | | |
| | ef36c50cf9 | | |
| | 43a5e5e85f | | |
| | 5bbc21ea3d | | |
| | 09366ed955 | | |
| | d4874e7c3a | | |
| | 7638fb4e5e | | |
| | 15a61a99d6 | | |
| | 556a50f49b | | |
| | b899f4e516 | | |
| | a8c85b4699 | | |
| | a96285d10b | | |
| | 83b78fe504 | | |
| | 84f9cb84e7 | | |
| | 6a66ff6abb | | |
| | 59892da866 | | |
| | fceb83c2b0 | | |
| | 17b01a7627 | | |
| | b96d7c30d0 | | |
| | aae83bb8f2 | | |
| | 10763a8f61 | | |
| | 64e8f093d2 | | |
| | 3e0a137c23 | | |
| | a1c16942ff | | |
| | 6ae3473214 | | |
| | 5d731cb43c | | |
| | c1fa2f1457 | | |
| | d8cfdd3af5 | | |
| | 03d12cbcd9 | | |
| | 2e76b6c4e7 | | |
| | 5eae2076ce | | |
| | 725e1f39ba | | |
| | ab651fe0d7 | | |
| | f76f8d4671 | | |
| | e32a48f42a | | |
| | 76989b39a6 | | |
| | 4d9fc381bb | | |
| | bcee253fe0 | | |
| | 499143009a | | |
| | c614625f04 | | |
| | ccd6e238b2 | | |
| | 829864af9b | | |

6

.devscripts/chmod.sh

Executable file


@@ -0,0 +1,6 @@

#!/bin/bash

set -Eeuo pipefail

find services -name "*.sh" -exec git update-index --chmod=+x {} \;
find .devscripts -name "*.sh" -exec git update-index --chmod=+x {} \;

30

.devscripts/migratev1tov2.sh

Executable file


@@ -0,0 +1,30 @@

mkdir -p data/.cache data/StableDiffusion data/Codeformer data/GFPGAN data/ESRGAN data/BSRGAN data/RealESRGAN data/SwinIR data/LDSR data/embeddings

cp -vf cache/models/model.ckpt data/StableDiffusion/model.ckpt

cp -vf cache/models/LDSR.ckpt data/LDSR/model.ckpt
cp -vf cache/models/LDSR.yaml data/LDSR/project.yaml

cp -vf cache/models/RealESRGAN_x4plus.pth data/RealESRGAN/
cp -vf cache/models/RealESRGAN_x4plus_anime_6B.pth data/RealESRGAN/

cp -vrf cache/torch data/.cache/

mkdir -p data/.cache/huggingface/transformers/
cp -vrf cache/transformers/* data/.cache/huggingface/transformers/

cp -v cache/custom-models/* data/StableDiffusion/

mkdir -p data/.cache/clip/
cp -vf cache/weights/ViT-L-14.pt data/.cache/clip/

cp -vf cache/weights/codeformer.pth data/Codeformer/codeformer-v0.1.0.pth

cp -vf cache/weights/detection_Resnet50_Final.pth data/.cache/
cp -vf cache/weights/parsing_parsenet.pth data/.cache/

cp -v embeddings/* data/embeddings/

echo this script was created 10/2022
echo Dont forget to run: docker compose --profile download up --build
echo the cache and embeddings folders can be deleted, but its not necessary.

9

.devscripts/migratev3tov4.sh

Executable file


@@ -0,0 +1,9 @@

#!/bin/bash

set -Eeuo pipefail

echo "Moving everything in output to output/old..."
mv output old
mkdir output
mv old/.gitignore output
mv old output
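The four `mv`/`mkdir` steps of `migratev3tov4.sh` can be replayed in a throwaway directory to see the resulting layout. This is only a sketch: the scratch directory and the `sample.png` file are made up for illustration, and the real script is meant to run from the repository root.

```shell
#!/bin/sh
# Sketch: replay the migratev3tov4.sh steps inside a scratch directory.
set -eu

workdir=$(mktemp -d)
cd "$workdir"

# Hypothetical starting layout: an output folder holding .gitignore and one image.
mkdir output
touch output/.gitignore output/sample.png

# The same four steps as in the script.
mv output old             # set the existing folder aside
mkdir output              # create a fresh, empty output folder
mv old/.gitignore output  # keep .gitignore at the top level
mv old output             # everything else ends up in output/old

# Show the result: .gitignore and old/ at the top, previous files under old/.
ls -A output
ls -A output/old
```

Because `output` already exists as a directory when the last `mv` runs, `old` is moved into it rather than replacing it.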

32

.github/ISSUE_TEMPLATE/bug.md

vendored


@@ -1,29 +1,43 @@

|

||||

---

|

||||

name: Bug

|

||||

about: Report a bug

|

||||

title: ''

|

||||

title: ""

|

||||

labels: bug

|

||||

assignees: ''

|

||||

|

||||

assignees: ""

|

||||

---

|

||||

|

||||

**Has this issue been opened before? Check the [FAQ](https://github.com/AbdBarho/stable-diffusion-webui-docker/wiki/Main), the [issues](https://github.com/AbdBarho/stable-diffusion-webui-docker/issues?q=is%3Aissue) and in [the issues in the WebUI repo](https://github.com/hlky/stable-diffusion-webui)**

|

||||

<!-- PLEASE FILL THIS OUT, IT WILL MAKE BOTH OF OUR LIVES EASIER -->

|

||||

|

||||

**Has this issue been opened before?**

|

||||

|

||||

- [ ] It is not in the [FAQ](https://github.com/AbdBarho/stable-diffusion-webui-docker/wiki/FAQ), I checked.

|

||||

- [ ] It is not in the [issues](https://github.com/AbdBarho/stable-diffusion-webui-docker/issues?q=), I searched.

|

||||

|

||||

**Describe the bug**

|

||||

|

||||

<!-- tried to run the app, my cat exploded -->

|

||||

|

||||

**Which UI**

|

||||

|

||||

auto or auto-cpu or invoke or sygil?

|

||||

|

||||

**Hardware / Software**

|

||||

|

||||

- OS: [e.g. Windows 10 / Ubuntu 22.04]

|

||||

- OS version: <!-- on windows, use the command `winver` to find out, on ubuntu `lsb_release -d` -->

|

||||

- WSL version (if applicable): <!-- get using `wsl -l -v` -->

|

||||

- Docker Version: <!-- get using `docker version` -->

|

||||

- Docker compose version: <!-- get using `docker compose version` -->

|

||||

- Repo version: <!-- tag, commit sha, or "from master" -->

|

||||

- RAM:

|

||||

- GPU/VRAM:

|

||||

|

||||

**Steps to Reproduce**

|

||||

|

||||

1. Go to '...'

|

||||

2. Click on '....'

|

||||

3. Scroll down to '....'

|

||||

4. See error

|

||||

|

||||

**Hardware / Software:**

|

||||

- OS: [e.g. Windows / Ubuntu and version]

|

||||

- GPU: [Nvidia 1660 / No GPU]

|

||||

- Version [e.g. 22]

|

||||

|

||||

**Additional context**

|

||||

Any other context about the problem here. If applicable, add screenshots to help explain your problem.

|

||||

|

||||

5

.github/ISSUE_TEMPLATE/config.yml

vendored

Normal file


@@ -0,0 +1,5 @@

blank_issues_enabled: false
contact_links:
  - name: Feature request? Questions regarding some extension?
    url: https://github.com/AbdBarho/stable-diffusion-webui-docker/discussions
    about: Please use the discussions tab

13

.github/pull_request_template.md

vendored

Normal file


@@ -0,0 +1,13 @@

<!--
Have you created an issue before opening a merge request???
https://github.com/AbdBarho/stable-diffusion-webui-docker#contributing
Please create one so we can discuss it, I don't want your effort to go to waste.
-->

Closes issue #

### Update versions

- auto: https://github.com/AUTOMATIC1111/stable-diffusion-webui/commit/
- sygil: https://github.com/Sygil-Dev/sygil-webui/commit/
- invoke: https://github.com/invoke-ai/InvokeAI/commit/

36

.github/workflows/docker.yml

vendored


@@ -1,24 +1,24 @@

|

||||

name: Build Image

|

||||

name: Build Images

|

||||

|

||||

on: [push]

|

||||

on:

|

||||

push:

|

||||

branches: master

|

||||

pull_request:

|

||||

paths:

|

||||

- docker-compose.yml

|

||||

- services

|

||||

|

||||

# TODO: how to cache intermediate images?

|

||||

jobs:

|

||||

build_hlky:

|

||||

build:

|

||||

strategy:

|

||||

matrix:

|

||||

profile:

|

||||

- auto

|

||||

- sygil

|

||||

- invoke

|

||||

- download

|

||||

runs-on: ubuntu-latest

|

||||

name: Build hlky

|

||||

name: ${{ matrix.profile }}

|

||||

steps:

|

||||

- uses: actions/checkout@v3

|

||||

- run: docker compose build --progress plain

|

||||

build_AUTOMATIC1111:

|

||||

runs-on: ubuntu-latest

|

||||

name: Build AUTOMATIC1111

|

||||

steps:

|

||||

- uses: actions/checkout@v3

|

||||

- run: cd AUTOMATIC1111 && docker compose build --progress plain

|

||||

build_lstein:

|

||||

runs-on: ubuntu-latest

|

||||

name: Build lstein

|

||||

steps:

|

||||

- uses: actions/checkout@v3

|

||||

- run: cd lstein && docker compose build --progress plain

|

||||

- run: docker compose --profile ${{ matrix.profile }} build --progress plain

|

||||

|

||||

20

.github/workflows/stale.yml

vendored

Normal file


@@ -0,0 +1,20 @@

name: 'Close stale issues and PRs'
on:
  schedule:
    - cron: '0 0 * * *'

jobs:
  stale:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/stale@v6
        with:
          only-labels: awaiting-response
          stale-issue-message: This issue is stale because it has been open 14 days with no activity. Remove stale label or comment or this will be closed in 7 days.
          stale-pr-message: This PR is stale because it has been open 14 days with no activity. Remove stale label or comment or this will be closed in 7 days.
          close-issue-message: This issue was closed because it has been stalled for 7 days with no activity.
          close-pr-message: This PR was closed because it has been stalled for 7 days with no activity.
          days-before-issue-stale: 14
          days-before-pr-stale: 14
          days-before-issue-close: 7
          days-before-pr-close: 7

2

.gitignore

vendored


@@ -1,2 +1,2 @@

|

||||

/dev

|

||||

/.devcontainer

|

||||

/docker-compose.override.yml

|

||||

|

||||

@@ -1,44 +0,0 @@

|

||||

# syntax=docker/dockerfile:1

|

||||

|

||||

FROM alpine/git:2.36.2 as download

|

||||

RUN <<EOF

|

||||

# who knows

|

||||

git config --global http.postBuffer 1048576000

|

||||

git clone https://github.com/CompVis/stable-diffusion.git repositories/stable-diffusion

|

||||

git clone https://github.com/CompVis/taming-transformers.git repositories/taming-transformers

|

||||

rm -rf repositories/taming-transformers/data repositories/taming-transformers/assets

|

||||

EOF

|

||||

|

||||

FROM pytorch/pytorch:1.12.1-cuda11.3-cudnn8-runtime

|

||||

|

||||

SHELL ["/bin/bash", "-ceuxo", "pipefail"]

|

||||

|

||||

ENV DEBIAN_FRONTEND=noninteractive

|

||||

RUN apt-get update && apt-get install git -y && apt-get clean

|

||||

|

||||

RUN <<EOF

|

||||

git clone https://github.com/AUTOMATIC1111/stable-diffusion-webui.git

|

||||

cd stable-diffusion-webui

|

||||

git reset --hard 064965c4660f57f24e2d51a9854defaeabf8c0cf

|

||||

pip install -U --prefer-binary --no-cache-dir -r requirements.txt

|

||||

EOF

|

||||

|

||||

RUN <<EOF

|

||||

pip install --prefer-binary -U --no-cache-dir diffusers numpy invisible-watermark git+https://github.com/crowsonkb/k-diffusion.git \

|

||||

git+https://github.com/TencentARC/GFPGAN.git markupsafe==2.0.1 opencv-python-headless

|

||||

EOF

|

||||

|

||||

|

||||

ENV ROOT=/workspace/stable-diffusion-webui \

|

||||

WORKDIR=/workspace/stable-diffusion-webui/repositories/stable-diffusion \

|

||||

TRANSFORMERS_CACHE=/cache/transformers TORCH_HOME=/cache/torch CLI_ARGS=""

|

||||

|

||||

COPY --from=download /git/ ${ROOT}

|

||||

|

||||

|

||||

COPY . /docker

|

||||

|

||||

WORKDIR ${WORKDIR}

|

||||

EXPOSE 7860

|

||||

# run, -u to not buffer stdout / stderr

|

||||

CMD /docker/mount.sh && python3 -u ../../webui.py --listen ${CLI_ARGS}

|

||||
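The `CMD` line of this removed Dockerfile is written in shell form and passes `${CLI_ARGS}` unquoted, so a value such as the `--medvram --opt-split-attention` set in the accompanying compose file is split on whitespace into separate arguments. A minimal sketch of that behaviour, where `count_args` is a hypothetical helper standing in for `webui.py`:

```shell
#!/bin/sh
# Sketch: how an unquoted ${CLI_ARGS} expands into separate arguments.
set -eu

CLI_ARGS="--medvram --opt-split-attention"

# Hypothetical stand-in for the real program: prints how many arguments it got.
count_args() { echo "$#"; }

# Unquoted, as in the CMD line: the value is split on whitespace.
unquoted=$(count_args --listen ${CLI_ARGS})

# Quoted: the whole value would arrive as one single argument.
quoted=$(count_args --listen "${CLI_ARGS}")

echo "unquoted: $unquoted arguments"
echo "quoted:   $quoted arguments"
```

The unquoted form is what lets one environment variable carry several flags, at the cost of not supporting flag values that themselves contain spaces.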

@@ -1,12 +0,0 @@

|

||||

# WebUI for AUTOMATIC1111

|

||||

|

||||

The WebUI of [AUTOMATIC1111/stable-diffusion-webui](https://github.com/AUTOMATIC1111/stable-diffusion-webui) as docker container!

|

||||

|

||||

## Setup

|

||||

|

||||

Clone this repo, download the `model.ckpt` and `GFPGANv1.3.pth` and put into the `models` folder as mentioned in [the main README](../README.md), then run

|

||||

|

||||

```

|

||||

cd AUTOMATIC1111

|

||||

docker compose up --build

|

||||

```

|

||||

@@ -1,3 +0,0 @@

{
  "outdir_samples": "/output"
}

@@ -1,20 +0,0 @@

|

||||

version: '3.9'

|

||||

|

||||

services:

|

||||

model:

|

||||

build: .

|

||||

ports:

|

||||

- "7860:7860"

|

||||

volumes:

|

||||

- ../cache:/cache

|

||||

- ../output:/output

|

||||

- ../models:/models

|

||||

environment:

|

||||

- CLI_ARGS=--medvram --opt-split-attention

|

||||

deploy:

|

||||

resources:

|

||||

reservations:

|

||||

devices:

|

||||

- driver: nvidia

|

||||

device_ids: ['0']

|

||||

capabilities: [gpu]

|

||||

@@ -1,28 +0,0 @@

#!/bin/bash

declare -A MODELS

MODELS["${WORKDIR}/models/ldm/stable-diffusion-v1/model.ckpt"]=model.ckpt
MODELS["${ROOT}/GFPGANv1.3.pth"]=GFPGANv1.3.pth

for path in "${!MODELS[@]}"; do
  name=${MODELS[$path]}
  base=$(dirname "${path}")
  from_path="/models/${name}"
  if test -f "${from_path}"; then
    mkdir -p "${base}" && ln -sf "${from_path}" "${path}" && echo "Mounted ${name}"
  else
    echo "Skipping ${name}"
  fi
done

# force realesrgan cache
rm -rf /opt/conda/lib/python3.7/site-packages/realesrgan/weights
ln -s -T /models /opt/conda/lib/python3.7/site-packages/realesrgan/weights

# force facexlib cache
mkdir -p /cache/weights/ ${WORKDIR}/gfpgan/
ln -sf /cache/weights/ ${WORKDIR}/gfpgan/

# mount config
ln -sf /docker/config.json ${WORKDIR}/config.json
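The loop in this removed `mount.sh` links each expected model into place only when the user actually supplied it under `/models`, and reports a skip otherwise. The sketch below reproduces that pattern in a scratch directory. It is not the original script: `link_model`, `scratch`, `models` and `target_root` are illustrative names, and a plain function replaces the bash associative array so the example also runs under a POSIX `sh`.

```shell
#!/bin/sh
# Sketch of the "link the model only if it was provided" step, in a scratch directory.
set -eu

scratch=$(mktemp -d)
models="$scratch/models"     # stands in for the /models volume
target_root="$scratch/app"   # stands in for the paths inside the image
mkdir -p "$models"
touch "$models/model.ckpt"   # pretend only this model was downloaded

# link_model NAME DEST: symlink $models/NAME to DEST when the file exists.
link_model() {
  name=$1
  dest=$2
  if test -f "$models/$name"; then
    mkdir -p "$(dirname "$dest")" && ln -sf "$models/$name" "$dest" && echo "Mounted $name"
  else
    echo "Skipping $name"
  fi
}

link_model model.ckpt "$target_root/models/ldm/stable-diffusion-v1/model.ckpt"
link_model GFPGANv1.3.pth "$target_root/GFPGANv1.3.pth"
```

Here only `model.ckpt` is linked, and the missing optional model is skipped without stopping the script, so the container can still start.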

2

LICENSE


@@ -86,4 +86,4 @@ administration of justice, law enforcement, immigration or asylum

|

||||

processes, such as predicting an individual will commit fraud/crime

|

||||

commitment (e.g. by text profiling, drawing causal relationships between

|

||||

assertions made in documents, indiscriminate and arbitrarily-targeted

|

||||

use).

|

||||

use).

|

||||

|

||||

86

README.md


@@ -1,89 +1,55 @@

|

||||

# Stable Diffusion WebUI Docker

|

||||

# Stable Diffusion WebUI Docker

|

||||

|

||||

Run Stable Diffusion on your machine with a nice UI without any hassle!

|

||||

|

||||

This repository provides the [WebUI](https://github.com/hlky/stable-diffusion-webui) as a docker image for easy setup and deployment.

|

||||

## Setup & Usage

|

||||

|

||||

Now with experimental support for 2 other forks:

|

||||

|

||||

- [AUTOMATIC1111](./AUTOMATIC1111/) (Stable, very few bugs!)

|

||||

- [lstein](./lstein/)

|

||||

Visit the wiki for [Setup](https://github.com/AbdBarho/stable-diffusion-webui-docker/wiki/Setup) and [Usage](https://github.com/AbdBarho/stable-diffusion-webui-docker/wiki/Usage) instructions, checkout the [FAQ](https://github.com/AbdBarho/stable-diffusion-webui-docker/wiki/FAQ) page if you face any problems, or create a new issue!

|

||||

|

||||

## Features

|

||||

|

||||

- Interactive UI with many features, and more on the way!

|

||||

- Support for 6GB GPU cards.

|

||||

- GFPGAN for face reconstruction, RealESRGAN for super-sampling.

|

||||

- Experimental:

|

||||

- Latent Diffusion Super Resolution

|

||||

- GoBig

|

||||

- GoLatent

|

||||

- many more!

|

||||

This repository provides multiple UIs for you to play around with stable diffusion:

|

||||

|

||||

## Setup

|

||||

### [AUTOMATIC1111](https://github.com/AUTOMATIC1111/stable-diffusion-webui)

|

||||

|

||||

Make sure you have an **up to date** version of docker installed. Download this repo and run:

|

||||

[Full feature list here](https://github.com/AUTOMATIC1111/stable-diffusion-webui-feature-showcase), Screenshots:

|

||||

|

||||

```

|

||||

docker compose build

|

||||

```

|

||||

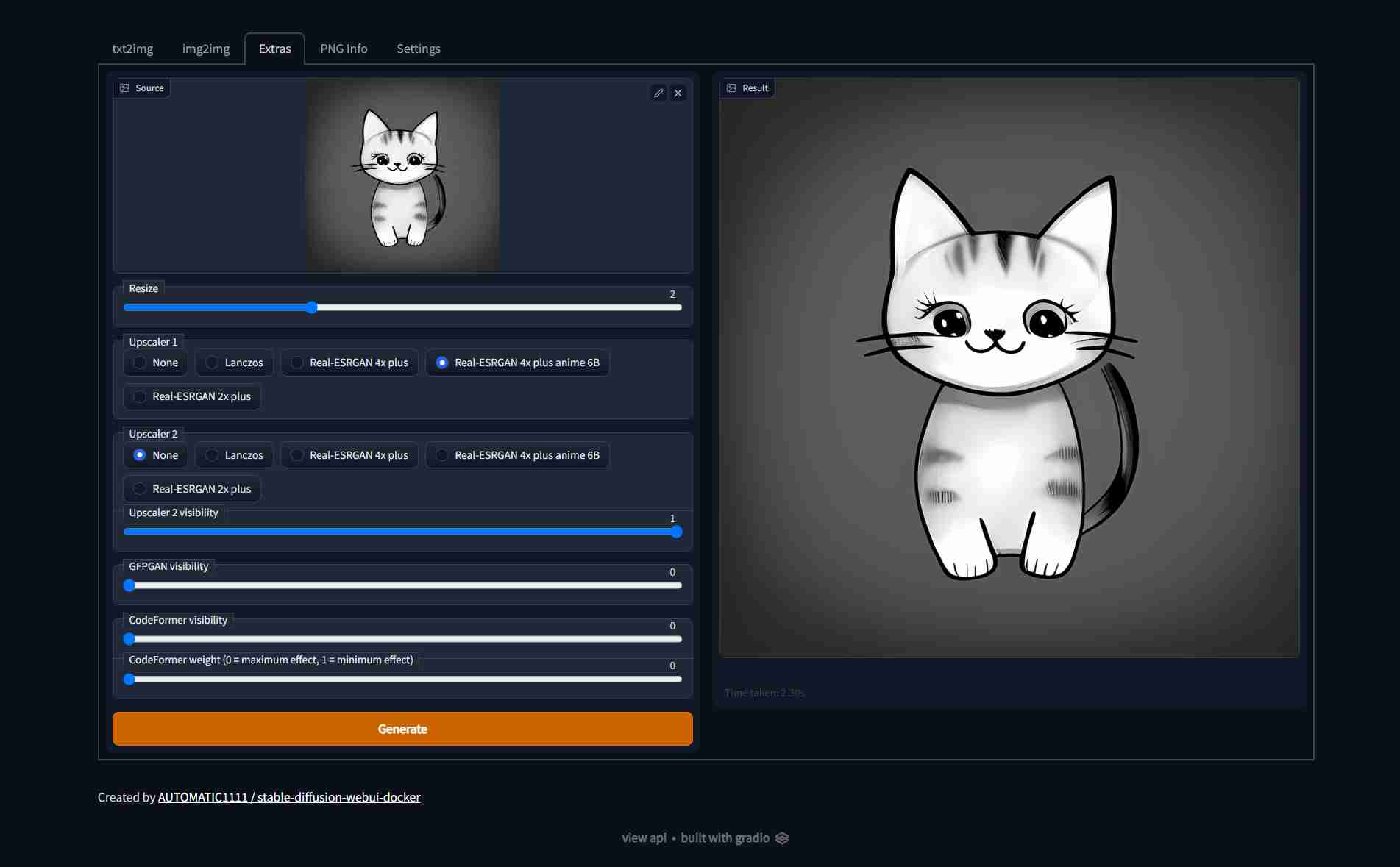

| Text to image | Image to image | Extras |

|

||||

| ---------------------------------------------------------------------------------------------------------- | ---------------------------------------------------------------------------------------------------------- | ---------------------------------------------------------------------------------------------------------- |

|

||||

|  |  |  |

|

||||

|

||||

you can let it build in the background while you download the different models

|

||||

### [InvokeAI (lstein)](https://github.com/invoke-ai/InvokeAI)

|

||||

|

||||

- [Stable Diffusion v1.4 (4GB)](https://www.googleapis.com/storage/v1/b/aai-blog-files/o/sd-v1-4.ckpt?alt=media), rename to `model.ckpt`

|

||||

- (Optional) [GFPGANv1.3.pth (333MB)](https://github.com/TencentARC/GFPGAN/releases/download/v1.3.0/GFPGANv1.3.pth).

|

||||

- (Optional) [RealESRGAN_x4plus.pth (64MB)](https://github.com/xinntao/Real-ESRGAN/releases/download/v0.1.0/RealESRGAN_x4plus.pth) and [RealESRGAN_x4plus_anime_6B.pth (18MB)](https://github.com/xinntao/Real-ESRGAN/releases/download/v0.2.2.4/RealESRGAN_x4plus_anime_6B.pth).

|

||||

- (Optional) [LDSR (2GB)](https://heibox.uni-heidelberg.de/f/578df07c8fc04ffbadf3/?dl=1) and [its configuration](https://heibox.uni-heidelberg.de/f/31a76b13ea27482981b4/?dl=1), rename to `LDSR.ckpt` and `LDSR.yaml` respectively.

|

||||

<!-- - (Optional) [RealESRGAN_x2plus.pth (64MB)](https://github.com/xinntao/Real-ESRGAN/releases/download/v0.2.1/RealESRGAN_x2plus.pth)

|

||||

- TODO: (I still need to find the RealESRGAN_x2plus_6b.pth) -->

|

||||

[Full feature list here](https://github.com/invoke-ai/InvokeAI#features), Screenshots:

|

||||

|

||||

Put all of the downloaded files in the `models` folder, it should look something like this:

|

||||

| Text to image | Image to image | Extras |

|

||||

| ---------------------------------------------------------------------------------------------------------- | ---------------------------------------------------------------------------------------------------------- | ---------------------------------------------------------------------------------------------------------- |

|

||||

|  |  |  |

|

||||

|

||||

```

|

||||

models/

|

||||

├── model.ckpt

|

||||

├── GFPGANv1.3.pth

|

||||

├── RealESRGAN_x4plus.pth

|

||||

├── RealESRGAN_x4plus_anime_6B.pth

|

||||

├── LDSR.ckpt

|

||||

└── LDSR.yaml

|

||||

```

|

||||

### [Sygil (sd-webui / hlky)](https://github.com/Sygil-Dev/sygil-webui)

|

||||

|

||||

## Run

|

||||

[Full feature list here](https://github.com/Sygil-Dev/sygil-webui/blob/master/README.md), Screenshots:

|

||||

|

||||

After the build is done, you can run the app with:

|

||||

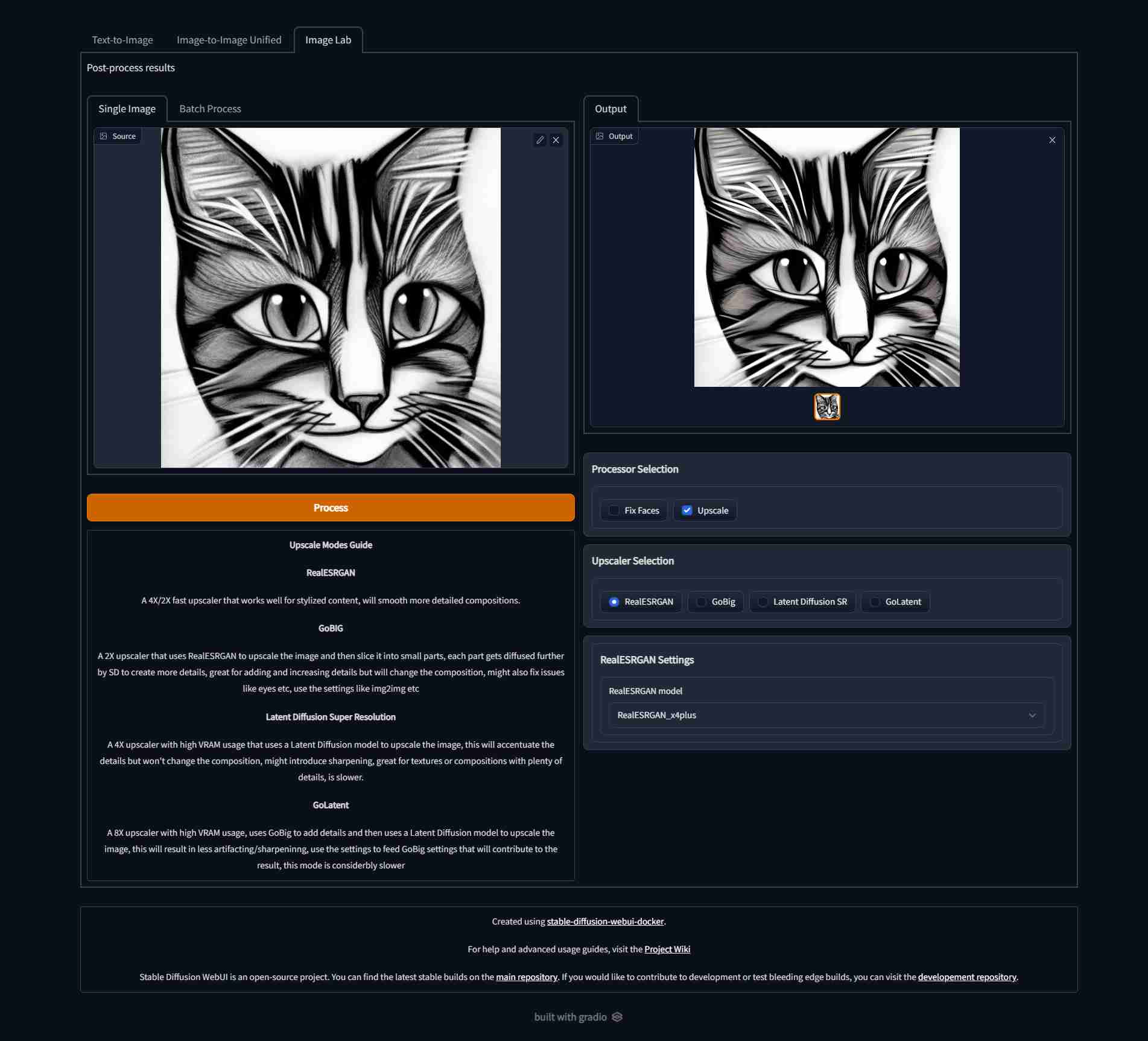

| Text to image | Image to image | Image Lab |

|

||||

| ---------------------------------------------------------------------------------------------------------- | ---------------------------------------------------------------------------------------------------------- | ---------------------------------------------------------------------------------------------------------- |

|

||||

|  |  |  |

|

||||

|

||||

```

|

||||

docker compose up --build

|

||||

```

|

||||

## Contributing

|

||||

|

||||

Will start the app on http://localhost:7860/

|

||||

Contributions are welcome! **Create a discussion first of what the problem is and what you want to contribute (before you implement anything)**

|

||||

|

||||

Note: the first start will take sometime as some other models will be downloaded, these will be cached in the `cache` folder, so next runs are faster.

|

||||

|

||||

### FAQ

|

||||

|

||||

You can find fixes to common issues [in the wiki page.](https://github.com/AbdBarho/stable-diffusion-webui-docker/wiki/FAQ)

|

||||

|

||||

## Config

|

||||

|

||||

in the `docker-compose.yml` you can change the `CLI_ARGS` variable, which contains the arguments that will be passed to the WebUI. By default: `--extra-models-cpu --optimized-turbo` are given, which allow you to use this model on a 6GB GPU. However, some features might not be available in the mode. [You can find the full list of arguments here.](https://github.com/hlky/stable-diffusion/blob/bb765f1897c968495ffe12a06b421d97b56d5ae1/scripts/webui.py)

|

||||

|

||||

You can set the `WEBUI_SHA` to [any SHA from the main repo](https://github.com/hlky/stable-diffusion/commits/main), this will build the container against that commit. Use at your own risk.

|

||||

|

||||

# Disclaimer

|

||||

## Disclaimer

|

||||

|

||||

The authors of this project are not responsible for any content generated using this interface.

|

||||

|

||||

This license of this software forbids you from sharing any content that violates any laws, produce any harm to a person, disseminate any personal information that would be meant for harm, spread misinformation and target vulnerable groups. For the full list of restrictions please read [the license](./LICENSE).

|

||||

|

||||

# Thanks

|

||||

## Thanks

|

||||

|

||||

Special thanks to everyone behind these awesome projects, without them, none of this would have been possible:

|

||||

|

||||

- [hlky/stable-diffusion-webui](https://github.com/hlky/stable-diffusion-webui)

|

||||

- [AUTOMATIC1111/stable-diffusion-webui](https://github.com/AUTOMATIC1111/stable-diffusion-webui)

|

||||

- [lstein/stable-diffusion](https://github.com/lstein/stable-diffusion)

|

||||

- [InvokeAI](https://github.com/invoke-ai/InvokeAI)

|

||||

- [Sygil-webui](https://github.com/Sygil-Dev/sygil-webui)

|

||||

- [CompVis/stable-diffusion](https://github.com/CompVis/stable-diffusion)

|

||||

- [hlky/sd-enable-textual-inversion](https://github.com/hlky/sd-enable-textual-inversion)

|

||||

- [devilismyfriend/latent-diffusion](https://github.com/devilismyfriend/latent-diffusion)

|

||||

- and many many more.

|

||||

|

||||

3

cache/.gitignore

vendored


@@ -1,3 +0,0 @@

/torch
/transformers
/weights

21

data/.gitignore

vendored

Normal file


@@ -0,0 +1,21 @@

# for all of the stuff downloaded by transformers, pytorch, and others
/.cache
# for UIs
/config
# for all stable diffusion models (main, waifu diffusion, etc..)
/StableDiffusion
# others
/Codeformer
/GFPGAN
/ESRGAN
/BSRGAN
/RealESRGAN
/SwinIR
/MiDaS
/BLIP
/ScuNET
/LDSR
/Deepdanbooru
/Hypernetworks
/VAE
/embeddings

@@ -1,22 +1,11 @@

|

||||

version: '3.9'

|

||||

|

||||

services:

|

||||

model:

|

||||

build:

|

||||

context: ./hlky/

|

||||

args:

|

||||

# You can choose any commit sha from https://github.com/hlky/stable-diffusion/commits/main

|

||||

# USE AT YOUR OWN RISK! otherwise just leave it empty.

|

||||

WEBUI_SHA:

|

||||

restart: on-failure

|

||||

x-base_service: &base_service

|

||||

ports:

|

||||

- "7860:7860"

|

||||

volumes:

|

||||

- ./cache:/cache

|

||||

- ./output:/output

|

||||

- ./models:/models

|

||||

environment:

|

||||

- CLI_ARGS=--extra-models-cpu --optimized-turbo

|

||||

- &v1 ./data:/data

|

||||

- &v2 ./output:/output

|

||||

deploy:

|

||||

resources:

|

||||

reservations:

|

||||

@@ -24,3 +13,52 @@ services:

|

||||

- driver: nvidia

|

||||

device_ids: ['0']

|

||||

capabilities: [gpu]

|

||||

|

||||

name: webui-docker

|

||||

|

||||

services:

|

||||

download:

|

||||

build: ./services/download/

|

||||

profiles: ["download"]

|

||||

volumes:

|

||||

- *v1

|

||||

|

||||

auto: &automatic

|

||||

<<: *base_service

|

||||

profiles: ["auto"]

|

||||

build: ./services/AUTOMATIC1111

|

||||

image: sd-auto:31

|

||||

environment:

|

||||

- CLI_ARGS=--allow-code --medvram --xformers --enable-insecure-extension-access --api

|

||||

|

||||

auto-cpu:

|

||||

<<: *automatic

|

||||

profiles: ["auto-cpu"]

|

||||

deploy: {}

|

||||

environment:

|

||||

- CLI_ARGS=--no-half --precision full

|

||||

|

||||

invoke:

|

||||

<<: *base_service

|

||||

profiles: ["invoke"]

|

||||

build: ./services/invoke/

|

||||

image: sd-invoke:17

|

||||

environment:

|

||||

- PRELOAD=true

|

||||

- CLI_ARGS=

|

||||

|

||||

|

||||

sygil: &sygil

|

||||

<<: *base_service

|

||||

profiles: ["sygil"]

|

||||

build: ./services/sygil/

|

||||

image: sd-sygil:16

|

||||

environment:

|

||||

- CLI_ARGS=--optimized-turbo

|

||||

- USE_STREAMLIT=0

|

||||

|

||||

sygil-sl:

|

||||

<<: *sygil

|

||||

profiles: ["sygil-sl"]

|

||||

environment:

|

||||

- USE_STREAMLIT=1

|

||||

|

||||

@@ -1,71 +0,0 @@

|

||||

# syntax=docker/dockerfile:1

|

||||

|

||||

FROM continuumio/miniconda3:4.12.0

|

||||

|

||||

SHELL ["/bin/bash", "-ceuxo", "pipefail"]

|

||||

|

||||

RUN conda install python=3.8.5 && conda clean -a -y

|

||||

RUN conda install pytorch==1.11.0 torchvision==0.12.0 cudatoolkit=11.3 -c pytorch && conda clean -a -y

|

||||

|

||||

RUN apt-get update && apt install fonts-dejavu-core rsync -y && apt-get clean

|

||||

|

||||

|

||||

RUN <<EOF

|

||||

git clone https://github.com/hlky/stable-diffusion.git

|

||||

cd stable-diffusion

|

||||

git reset --hard c84748aa6802c2f934687883a79bde745d2a58a6

|

||||

conda env update --file environment.yaml -n base

|

||||

conda clean -a -y

|

||||

EOF

|

||||

|

||||

# new dependency, should be added to the environment.yaml

|

||||

RUN pip install -U --no-cache-dir pyperclip

|

||||

|

||||

# Note: don't update the sha of previous versions because the install will take forever

|

||||

# instead, update the repo state in a later step

|

||||

ARG WEBUI_SHA=bb765f1897c968495ffe12a06b421d97b56d5ae1

|

||||

RUN cd stable-diffusion && git pull && git reset --hard ${WEBUI_SHA} && \

|

||||

conda env update --file environment.yaml --name base && conda clean -a -y

|

||||

|

||||

|

||||

# download dev UI version, update the sha below in case you want some other version

|

||||

# RUN <<EOF

|

||||

# git clone https://github.com/hlky/stable-diffusion-webui.git

|

||||

# cd stable-diffusion-webui

|

||||

# # map to this file: https://github.com/hlky/stable-diffusion-webui/blob/master/.github/sync.yml

|

||||

# git reset --hard 49e6178fd82ca736f9bbc621c6b12487c300e493

|

||||

# cp -t /stable-diffusion/scripts/ webui.py relauncher.py txt2img.yaml

|

||||

# cp -t /stable-diffusion/configs/webui webui.yaml

|

||||

# cp -t /stable-diffusion/frontend/ frontend/*

|

||||

# cd / && rm -rf stable-diffusion-webui

|

||||

# EOF

|

||||

|

||||

# Textual inversion

|

||||

RUN <<EOF

|

||||

git clone https://github.com/hlky/sd-enable-textual-inversion.git &&

|

||||

cd /sd-enable-textual-inversion && git reset --hard 08f9b5046552d17cf7327b30a98410222741b070 &&

|

||||

rsync -a /sd-enable-textual-inversion/ /stable-diffusion/ &&

|

||||

rm -rf /sd-enable-textual-inversion

|

||||

EOF

|

||||

|

||||

# Latent diffusion

|

||||

RUN <<EOF

|

||||

git clone https://github.com/devilismyfriend/latent-diffusion &&

|

||||

cd /latent-diffusion &&

|

||||

git reset --hard 6d61fc03f15273a457950f2cdc10dddf53ba6809 &&

|

||||

# hacks all the way down

|

||||

mv ldm ldm_latent &&

|

||||

sed -i -- 's/from ldm/from ldm_latent/g' *.py

|

||||

# dont forget to update the yaml!!

|

||||

EOF

|

||||

|

||||

|

||||

# add info

|

||||

COPY . /docker/

|

||||

RUN python /docker/info.py /stable-diffusion/frontend/frontend.py

|

||||

|

||||

WORKDIR /stable-diffusion

|

||||

ENV TRANSFORMERS_CACHE=/cache/transformers TORCH_HOME=/cache/torch CLI_ARGS=""

|

||||

EXPOSE 7860

|

||||

# run, -u to not buffer stdout / stderr

|

||||

CMD /docker/mount.sh && python3 -u scripts/webui.py --outdir /output --ckpt /models/model.ckpt --ldsr-dir /latent-diffusion ${CLI_ARGS}

|

||||

@@ -1,31 +0,0 @@

|

||||

#!/bin/bash

|

||||

|

||||

set -e

|

||||

|

||||

declare -A MODELS

|

||||

MODELS["/stable-diffusion/src/gfpgan/experiments/pretrained_models/GFPGANv1.3.pth"]=GFPGANv1.3.pth

|

||||

MODELS["/stable-diffusion/src/realesrgan/experiments/pretrained_models/RealESRGAN_x4plus.pth"]=RealESRGAN_x4plus.pth

|

||||

MODELS["/stable-diffusion/src/realesrgan/experiments/pretrained_models/RealESRGAN_x4plus_anime_6B.pth"]=RealESRGAN_x4plus_anime_6B.pth

|

||||

MODELS["/latent-diffusion/experiments/pretrained_models/model.ckpt"]=LDSR.ckpt

|

||||

# MODELS["/latent-diffusion/experiments/pretrained_models/project.yaml"]=LDSR.yaml

|

||||

|

||||

for path in "${!MODELS[@]}"; do

|

||||

name=${MODELS[$path]}

|

||||

base=$(dirname "${path}")

|

||||

from_path="/models/${name}"

|

||||

if test -f "${from_path}"; then

|

||||

mkdir -p "${base}" && ln -sf "${from_path}" "${path}" && echo "Mounted ${name}"

|

||||

else

|

||||

echo "Skipping ${name}"

|

||||

fi

|

||||

done

|

||||

|

||||

# hack for latent-diffusion

|

||||

if test -f /models/LDSR.yaml; then

|

||||

sed 's/ldm\./ldm_latent\./g' /models/LDSR.yaml >/latent-diffusion/experiments/pretrained_models/project.yaml

|

||||

fi

|

||||

|

||||

# force facexlib cache

|

||||

mkdir -p /cache/weights/

|

||||

rm -rf /stable-diffusion/src/facexlib/facexlib/weights

|

||||

ln -sf /cache/weights/ /stable-diffusion/src/facexlib/facexlib/

|

||||

@@ -1,29 +0,0 @@

# syntax=docker/dockerfile:1

FROM continuumio/miniconda3:4.12.0

SHELL ["/bin/bash", "-ceuxo", "pipefail"]

RUN conda install python=3.8.5 && conda clean -a -y
RUN conda install pytorch==1.11.0 torchvision==0.12.0 cudatoolkit=11.3 -c pytorch && conda clean -a -y

RUN apt-get update && apt install fonts-dejavu-core rsync -y && apt-get clean


RUN <<EOF
git clone https://github.com/lstein/stable-diffusion.git
cd stable-diffusion
git reset --hard 751283a2de81bee4bb571fbabe4adb19f1d85b97
conda env update --file environment.yaml -n base
conda clean -a -y
EOF

ENV TRANSFORMERS_CACHE=/cache/transformers TORCH_HOME=/cache/torch CLI_ARGS=""

WORKDIR /stable-diffusion

EXPOSE 7860
# run, -u to not buffer stdout / stderr
CMD mkdir -p /stable-diffusion/models/ldm/stable-diffusion-v1/ && \
  ln -sf /models/model.ckpt /stable-diffusion/models/ldm/stable-diffusion-v1/model.ckpt && \
  python3 -u scripts/dream.py --outdir /output --web --host 0.0.0.0 --port 7860 ${CLI_ARGS}

@@ -1,14 +0,0 @@

# WebUI for lstein

The WebUI of [lstein/stable-diffusion](https://github.com/lstein/stable-diffusion) as a Docker container!

Although it is a simple UI, the project has a lot of potential.

## Setup

Clone this repo, download `model.ckpt` and put it into the `models` folder as described in [the main README](../README.md), then run:

```
cd lstein
docker compose up --build
```

@@ -1,21 +0,0 @@

version: '3.9'

services:
  model:
    build: .
    restart: on-failure
    ports:
      - "7860:7860"
    volumes:
      - ../cache:/cache
      - ../output:/output
      - ../models:/models
    environment:
      - CLI_ARGS=
    deploy:
      resources:
        reservations:
          devices:
            - driver: nvidia
              device_ids: ['0']
              capabilities: [gpu]

7

models/.gitignore

vendored

@@ -1,7 +0,0 @@

/model.ckpt
/GFPGANv1.3.pth
/RealESRGAN_x2plus.pth
/RealESRGAN_x4plus.pth
/RealESRGAN_x4plus_anime_6B.pth
/LDSR.ckpt
/LDSR.yaml

93

services/AUTOMATIC1111/Dockerfile

Normal file

@@ -0,0 +1,93 @@

# syntax=docker/dockerfile:1

FROM alpine/git:2.36.2 as download

SHELL ["/bin/sh", "-ceuxo", "pipefail"]

RUN <<EOF
cat <<'EOE' > /clone.sh
mkdir -p repositories/"$1" && cd repositories/"$1" && git init && git remote add origin "$2" && git fetch origin "$3" --depth=1 && git reset --hard "$3" && rm -rf .git
EOE
EOF

RUN . /clone.sh taming-transformers https://github.com/CompVis/taming-transformers.git 24268930bf1dce879235a7fddd0b2355b84d7ea6 \
  && rm -rf data assets **/*.ipynb

RUN . /clone.sh stable-diffusion-stability-ai https://github.com/Stability-AI/stablediffusion.git 47b6b607fdd31875c9279cd2f4f16b92e4ea958e \
  && rm -rf assets data/**/*.png data/**/*.jpg data/**/*.gif

RUN . /clone.sh CodeFormer https://github.com/sczhou/CodeFormer.git c5b4593074ba6214284d6acd5f1719b6c5d739af \
  && rm -rf assets inputs

RUN . /clone.sh BLIP https://github.com/salesforce/BLIP.git 48211a1594f1321b00f14c9f7a5b4813144b2fb9
RUN . /clone.sh k-diffusion https://github.com/crowsonkb/k-diffusion.git 5b3af030dd83e0297272d861c19477735d0317ec
RUN . /clone.sh clip-interrogator https://github.com/pharmapsychotic/clip-interrogator 2486589f24165c8e3b303f84e9dbbea318df83e8


FROM alpine:3 as xformers
RUN apk add aria2
RUN aria2c -x 5 --dir / --out wheel.whl 'https://github.com/AbdBarho/stable-diffusion-webui-docker/releases/download/4.0.0/xformers-0.0.16rc393-cp310-cp310-manylinux2014_x86_64.whl'

FROM python:3.10-slim

SHELL ["/bin/bash", "-ceuxo", "pipefail"]

ENV DEBIAN_FRONTEND=noninteractive PIP_PREFER_BINARY=1

RUN PIP_NO_CACHE_DIR=1 pip install torch==1.12.1+cu116 torchvision==0.13.1+cu116 --extra-index-url https://download.pytorch.org/whl/cu116

RUN apt-get update && apt install fonts-dejavu-core rsync git jq moreutils -y && apt-get clean


RUN --mount=type=cache,target=/root/.cache/pip <<EOF
git clone https://github.com/AUTOMATIC1111/stable-diffusion-webui.git
cd stable-diffusion-webui
git reset --hard 4af3ca5393151d61363c30eef4965e694eeac15e
pip install -r requirements_versions.txt
EOF

RUN --mount=type=cache,target=/root/.cache/pip \
  --mount=type=bind,from=xformers,source=/wheel.whl,target=/xformers-0.0.15-cp310-cp310-linux_x86_64.whl \
  pip install triton /xformers-0.0.15-cp310-cp310-linux_x86_64.whl

ENV ROOT=/stable-diffusion-webui


COPY --from=download /git/ ${ROOT}
RUN mkdir ${ROOT}/interrogate && cp ${ROOT}/repositories/clip-interrogator/data/* ${ROOT}/interrogate
RUN --mount=type=cache,target=/root/.cache/pip \
  pip install -r ${ROOT}/repositories/CodeFormer/requirements.txt

RUN --mount=type=cache,target=/root/.cache/pip \
  pip install opencv-python-headless \
  git+https://github.com/TencentARC/GFPGAN.git@8d2447a2d918f8eba5a4a01463fd48e45126a379 \
  git+https://github.com/openai/CLIP.git@d50d76daa670286dd6cacf3bcd80b5e4823fc8e1 \
  git+https://github.com/mlfoundations/open_clip.git@bb6e834e9c70d9c27d0dc3ecedeebeaeb1ffad6b \
  pyngrok

# Note: don't update the sha of previous versions because the install will take forever
# instead, update the repo state in a later step

ARG SHA=2b94ec78869db7d2beaad23bdff47340416edf85
RUN --mount=type=cache,target=/root/.cache/pip <<EOF
cd stable-diffusion-webui
git fetch
git reset --hard ${SHA}
pip install -r requirements_versions.txt
EOF

# the version specifier is quoted so that the shell does not treat ">" as an output redirection
RUN --mount=type=cache,target=/root/.cache/pip \
  pip install -U opencv-python-headless 'transformers>=4.24'

COPY . /docker

RUN <<EOF
python3 /docker/info.py ${ROOT}/modules/ui.py
mv ${ROOT}/style.css ${ROOT}/user.css
EOF

WORKDIR ${ROOT}
ENV CLI_ARGS=""
EXPOSE 7860
ENTRYPOINT ["/docker/entrypoint.sh"]
CMD python3 -u webui.py --listen --port 7860 ${CLI_ARGS}

10

services/AUTOMATIC1111/config.json

Normal file

@@ -0,0 +1,10 @@

{
  "outdir_samples": "",
  "outdir_txt2img_samples": "/output/txt2img",
  "outdir_img2img_samples": "/output/img2img",
  "outdir_extras_samples": "/output/extras",
  "outdir_txt2img_grids": "/output/txt2img-grids",
  "outdir_img2img_grids": "/output/img2img-grids",
  "outdir_save": "/output/saved",
  "font": "DejaVuSans.ttf"
}

63

services/AUTOMATIC1111/entrypoint.sh

Executable file

@@ -0,0 +1,63 @@

#!/bin/bash

set -Eeuo pipefail

# TODO: move all mkdir -p ?
mkdir -p /data/config/auto/scripts/
cp -n /docker/config.json /data/config/auto/config.json
jq '. * input' /data/config/auto/config.json /docker/config.json | sponge /data/config/auto/config.json

if [ ! -f /data/config/auto/ui-config.json ]; then
  echo '{}' >/data/config/auto/ui-config.json
fi

# copy scripts, we cannot just mount the directory because it will override the already provided scripts in the repo
cp -rfT /data/config/auto/scripts/ "${ROOT}/scripts"

declare -A MOUNTS

MOUNTS["/root/.cache"]="/data/.cache"

# main
MOUNTS["${ROOT}/models/Stable-diffusion"]="/data/StableDiffusion"
MOUNTS["${ROOT}/models/VAE"]="/data/VAE"
MOUNTS["${ROOT}/models/Codeformer"]="/data/Codeformer"
MOUNTS["${ROOT}/models/GFPGAN"]="/data/GFPGAN"
MOUNTS["${ROOT}/models/ESRGAN"]="/data/ESRGAN"
MOUNTS["${ROOT}/models/BSRGAN"]="/data/BSRGAN"
MOUNTS["${ROOT}/models/RealESRGAN"]="/data/RealESRGAN"
MOUNTS["${ROOT}/models/SwinIR"]="/data/SwinIR"
MOUNTS["${ROOT}/models/ScuNET"]="/data/ScuNET"
MOUNTS["${ROOT}/models/LDSR"]="/data/LDSR"
MOUNTS["${ROOT}/models/hypernetworks"]="/data/Hypernetworks"
MOUNTS["${ROOT}/models/torch_deepdanbooru"]="/data/Deepdanbooru"
MOUNTS["${ROOT}/models/BLIP"]="/data/BLIP"
MOUNTS["${ROOT}/models/midas"]="/data/MiDaS"

MOUNTS["${ROOT}/embeddings"]="/data/embeddings"
MOUNTS["${ROOT}/config.json"]="/data/config/auto/config.json"
MOUNTS["${ROOT}/ui-config.json"]="/data/config/auto/ui-config.json"
MOUNTS["${ROOT}/extensions"]="/data/config/auto/extensions"

# extra hacks
MOUNTS["${ROOT}/repositories/CodeFormer/weights/facelib"]="/data/.cache"

for to_path in "${!MOUNTS[@]}"; do
  set -Eeuo pipefail
  from_path="${MOUNTS[${to_path}]}"
  rm -rf "${to_path}"
  if [ ! -f "$from_path" ]; then
    mkdir -vp "$from_path"
  fi
  mkdir -vp "$(dirname "${to_path}")"
  ln -sT "${from_path}" "${to_path}"
  echo Mounted $(basename "${from_path}")
done

if [ -f "/data/config/auto/startup.sh" ]; then
  pushd ${ROOT}
  . /data/config/auto/startup.sh
  popd
fi

exec "$@"
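The `jq '. * input'` line in the entrypoint merges two JSON objects recursively: `.` is the first file (the user's `/data/config/auto/config.json`), `input` is the second (`/docker/config.json`), and on conflicting keys the right-hand operand wins. A minimal Python sketch of that merge rule follows; `deep_merge` and the sample values are hypothetical and not part of the repository.

```python
def deep_merge(base, override):
    """Recursively merge two dicts; values from `override` win on conflicts."""
    merged = dict(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            # both sides are objects: merge them key by key
            merged[key] = deep_merge(merged[key], value)
        else:
            # scalars, lists, or mismatched types: the right-hand side replaces
            merged[key] = value
    return merged


# Hypothetical user config (left operand) and image defaults (right operand).
user_config = {"font": "Custom.ttf", "outdir_save": "/tmp/saved", "extra": {"a": 1}}
image_defaults = {"font": "DejaVuSans.ttf", "outdir_save": "/output/saved"}

merged = deep_merge(user_config, image_defaults)
print(merged)
```

Under this reading, keys that exist only in the user's file survive a restart, while the keys listed in `/docker/config.json` are reset to the image's values each time the container starts.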

14

services/AUTOMATIC1111/info.py

Normal file

@@ -0,0 +1,14 @@

import sys
from pathlib import Path

file = Path(sys.argv[1])
file.write_text(
  file.read_text()\
  .replace('    return demo', """
    with demo:
        gr.Markdown(
          'Created by [AUTOMATIC1111 / stable-diffusion-webui-docker](https://github.com/AbdBarho/stable-diffusion-webui-docker/)'
        )
    return demo
""", 1)
)
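To see what `info.py` does to `modules/ui.py`, the same `str.replace(..., 1)` call can be tried on a small stand-in. This is only a sketch: `SAMPLE_UI` is a hypothetical three-line substitute for the real file, the footer text is shortened, and four-space indentation of `return demo` is assumed.

```python
# Hypothetical stand-in for the end of modules/ui.py; the real file is far larger.
SAMPLE_UI = '''def create_ui():
    demo = object()
    return demo
'''

# Shortened version of the block that info.py splices in before the return.
FOOTER = """
    with demo:
        gr.Markdown('Created by ...')
    return demo
"""


def patch_source(source: str) -> str:
    # count=1 limits the rewrite to the first occurrence, as in info.py
    return source.replace('    return demo', FOOTER, 1)


patched = patch_source(SAMPLE_UI)
print(patched)
```

If the target line is not found, `replace` returns the text unchanged, so the patch fails silently rather than raising an error.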

6

services/download/Dockerfile

Normal file

@@ -0,0 +1,6 @@

FROM bash:alpine3.15

RUN apk add parallel aria2
COPY . /docker
RUN chmod +x /docker/download.sh
ENTRYPOINT ["/docker/download.sh"]

8

services/download/checksums.sha256

Normal file

@@ -0,0 +1,8 @@

cc6cb27103417325ff94f52b7a5d2dde45a7515b25c255d8e396c90014281516  /data/StableDiffusion/v1-5-pruned-emaonly.ckpt
c6bbc15e3224e6973459ba78de4998b80b50112b0ae5b5c67113d56b4e366b19  /data/StableDiffusion/sd-v1-5-inpainting.ckpt
c6a580b13a5bc05a5e16e4dbb80608ff2ec251a162311590c1f34c013d7f3dab  /data/VAE/vae-ft-mse-840000-ema-pruned.ckpt
e2cd4703ab14f4d01fd1383a8a8b266f9a5833dacee8e6a79d3bf21a1b6be5ad  /data/GFPGAN/GFPGANv1.4.pth
4fa0d38905f75ac06eb49a7951b426670021be3018265fd191d2125df9d682f1  /data/RealESRGAN/RealESRGAN_x4plus.pth
f872d837d3c90ed2e05227bed711af5671a6fd1c9f7d7e91c911a61f155e99da  /data/RealESRGAN/RealESRGAN_x4plus_anime_6B.pth
c209caecac2f97b4bb8f4d726b70ac2ac9b35904b7fc99801e1f5e61f9210c13  /data/LDSR/model.ckpt
9d6ad53c5dafeb07200fb712db14b813b527edd262bc80ea136777bdb41be2ba  /data/LDSR/project.yaml

28

services/download/download.sh

Executable file

@@ -0,0 +1,28 @@

#!/usr/bin/env bash

set -Eeuo pipefail

# TODO: maybe just use the .gitignore file to create all of these
mkdir -vp /data/.cache /data/StableDiffusion /data/Codeformer /data/GFPGAN /data/ESRGAN /data/BSRGAN /data/RealESRGAN /data/SwinIR /data/LDSR /data/ScuNET /data/embeddings /data/VAE /data/Deepdanbooru /data/MiDaS

echo "Downloading, this might take a while..."

aria2c -x 10 --disable-ipv6 --input-file /docker/links.txt --dir /data --continue

echo "Checking SHAs..."

parallel --will-cite -a /docker/checksums.sha256 "echo -n {} | sha256sum -c"

cat <<EOF
By using this software, you agree to the following licenses:
https://github.com/CompVis/stable-diffusion/blob/main/LICENSE
https://github.com/AbdBarho/stable-diffusion-webui-docker/blob/master/LICENSE
https://github.com/sd-webui/stable-diffusion-webui/blob/master/LICENSE
https://github.com/invoke-ai/InvokeAI/blob/main/LICENSE
https://github.com/cszn/BSRGAN/blob/main/LICENSE
https://github.com/sczhou/CodeFormer/blob/master/LICENSE
https://github.com/TencentARC/GFPGAN/blob/master/LICENSE
https://github.com/xinntao/Real-ESRGAN/blob/master/LICENSE
https://github.com/xinntao/ESRGAN/blob/master/LICENSE
https://github.com/cszn/SCUNet/blob/main/LICENSE
EOF
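The "Checking SHAs" step feeds each line of `checksums.sha256` to `sha256sum -c`, which recomputes the file's digest and compares it with the recorded one. The same check can be sketched in Python with `hashlib`. The helper names `sha256_of` and `verify` are hypothetical, and the demo uses a throwaway temporary file rather than the real model paths.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in chunks so multi-gigabyte checkpoints need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify(checksum_lines):
    """Return (path, ok) pairs for lines of the form '<hex digest>  <path>'."""
    results = []
    for line in checksum_lines:
        if not line.strip():
            continue
        expected, path = line.split(maxsplit=1)
        ok = Path(path).is_file() and sha256_of(path) == expected
        results.append((path, ok))
    return results


# Demo on a temporary file standing in for a downloaded model.
with tempfile.TemporaryDirectory() as tmp:
    sample = Path(tmp) / "model.ckpt"
    sample.write_bytes(b"not a real checkpoint")
    good = hashlib.sha256(b"not a real checkpoint").hexdigest()
    report = verify([f"{good}  {sample}", f"{'0' * 64}  {sample}"])

for path, ok in report:
    print("OK    " if ok else "FAILED", path)
```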

16

services/download/links.txt

Normal file

@@ -0,0 +1,16 @@

https://huggingface.co/runwayml/stable-diffusion-v1-5/resolve/main/v1-5-pruned-emaonly.ckpt
  out=StableDiffusion/v1-5-pruned-emaonly.ckpt
https://huggingface.co/stabilityai/sd-vae-ft-mse-original/resolve/main/vae-ft-mse-840000-ema-pruned.ckpt
  out=VAE/vae-ft-mse-840000-ema-pruned.ckpt
https://huggingface.co/runwayml/stable-diffusion-inpainting/resolve/main/sd-v1-5-inpainting.ckpt
  out=StableDiffusion/sd-v1-5-inpainting.ckpt
https://github.com/TencentARC/GFPGAN/releases/download/v1.3.4/GFPGANv1.4.pth
  out=GFPGAN/GFPGANv1.4.pth
https://github.com/xinntao/Real-ESRGAN/releases/download/v0.1.0/RealESRGAN_x4plus.pth
  out=RealESRGAN/RealESRGAN_x4plus.pth
https://github.com/xinntao/Real-ESRGAN/releases/download/v0.2.2.4/RealESRGAN_x4plus_anime_6B.pth
  out=RealESRGAN/RealESRGAN_x4plus_anime_6B.pth
https://heibox.uni-heidelberg.de/f/31a76b13ea27482981b4/?dl=1
  out=LDSR/project.yaml
https://heibox.uni-heidelberg.de/f/578df07c8fc04ffbadf3/?dl=1
  out=LDSR/model.ckpt
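`links.txt` follows the aria2 input-file layout: a URI line, followed by indented option lines (here only `out=`) that apply to that URI. The sketch below parses that layout into pairs, for example to list which files a download run should produce. `parse_aria2_input` is a hypothetical helper, the sample URLs are placeholders, and several tab-separated URIs on one line are not handled.

```python
def parse_aria2_input(text):
    """Parse an aria2 input file into a list of (uri, options) tuples."""
    entries = []
    for raw in text.splitlines():
        if not raw.strip() or raw.lstrip().startswith("#"):
            continue  # skip blank lines and comments
        if raw[0].isspace():
            # indented line: an option belonging to the most recent URI
            if not entries:
                raise ValueError("option line appears before any URI")
            key, _, value = raw.strip().partition("=")
            entries[-1][1][key] = value
        else:
            entries.append((raw.strip(), {}))
    return entries


# Placeholder input in the same shape as links.txt.
SAMPLE = """\
https://example.com/models/a.ckpt
  out=StableDiffusion/a.ckpt
https://example.com/models/b.pth
  out=GFPGAN/b.pth
"""

entries = parse_aria2_input(SAMPLE)
for uri, options in entries:
    print(options.get("out"), "<-", uri)
```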

58

services/invoke/Dockerfile

Normal file

@@ -0,0 +1,58 @@

# syntax=docker/dockerfile:1

FROM python:3.10-slim
SHELL ["/bin/bash", "-ceuxo", "pipefail"]

ENV DEBIAN_FRONTEND=noninteractive PIP_EXISTS_ACTION=w PIP_PREFER_BINARY=1


RUN --mount=type=cache,target=/root/.cache/pip \
  pip install torch==1.12.0+cu116 --extra-index-url https://download.pytorch.org/whl/cu116

RUN apt-get update && apt-get install git -y && apt-get clean

RUN git clone https://github.com/invoke-ai/InvokeAI.git /stable-diffusion

WORKDIR /stable-diffusion

RUN --mount=type=cache,target=/root/.cache/pip <<EOF
git reset --hard 5c31feb3a1096d437c94b6e1c3224eb7a7224a85
git config --global http.postBuffer 1048576000
pip install -r binary_installer/py3.10-linux-x86_64-cuda-reqs.txt
EOF


# patch match:
# https://github.com/invoke-ai/InvokeAI/blob/main/docs/installation/INSTALL_PATCHMATCH.md
RUN <<EOF
apt-get update
# apt-get install build-essential python3-opencv libopencv-dev -y
apt-get install make g++ libopencv-dev -y
apt-get clean
cd /usr/lib/x86_64-linux-gnu/pkgconfig/
ln -sf opencv4.pc opencv.pc
EOF

ARG BRANCH=main SHA=26e413ae9cf8dc04c617ca451a91a1624bfdf0c0
RUN --mount=type=cache,target=/root/.cache/pip <<EOF
git fetch
git reset --hard
git checkout ${BRANCH}
git reset --hard ${SHA}
pip install -r binary_installer/py3.10-linux-x86_64-cuda-reqs.txt
EOF

RUN --mount=type=cache,target=/root/.cache/pip \
  pip install -U --force-reinstall opencv-python-headless huggingface_hub && \
  python3 -c "from patchmatch import patch_match"


RUN touch invokeai.init
COPY . /docker/


ENV ROOT=/stable-diffusion PYTHONPATH="${PYTHONPATH}:${ROOT}" PRELOAD=false CLI_ARGS=""
EXPOSE 7860

ENTRYPOINT ["/docker/entrypoint.sh"]
CMD python3 -u scripts/invoke.py --web --host 0.0.0.0 --port 7860 --config /docker/models.yaml --root_dir ${ROOT} --outdir /output/invoke ${CLI_ARGS}

46

services/invoke/entrypoint.sh

Executable file

@@ -0,0 +1,46 @@

#!/bin/bash

set -Eeuo pipefail

declare -A MOUNTS

# cache
MOUNTS["/root/.cache"]=/data/.cache/

# ui specific
MOUNTS["${ROOT}/models/codeformer"]=/data/Codeformer/

MOUNTS["${ROOT}/models/gfpgan/GFPGANv1.4.pth"]=/data/GFPGAN/GFPGANv1.4.pth
MOUNTS["${ROOT}/models/gfpgan/weights"]=/data/.cache/

MOUNTS["${ROOT}/models/realesrgan"]=/data/RealESRGAN/

MOUNTS["${ROOT}/models/bert-base-uncased"]=/data/.cache/huggingface/transformers/
MOUNTS["${ROOT}/models/openai/clip-vit-large-patch14"]=/data/.cache/huggingface/transformers/
MOUNTS["${ROOT}/models/CompVis/stable-diffusion-safety-checker"]=/data/.cache/huggingface/transformers/

MOUNTS["${ROOT}/embeddings"]=/data/embeddings/

# hacks
MOUNTS["${ROOT}/models/clipseg"]=/data/.cache/invoke/clipseg/

for to_path in "${!MOUNTS[@]}"; do
  set -Eeuo pipefail
  from_path="${MOUNTS[${to_path}]}"
  rm -rf "${to_path}"
  mkdir -p "$(dirname "${to_path}")"
  # ends with slash, make it!
  if [[ "$from_path" == */ ]]; then
    mkdir -vp "$from_path"
  fi

  ln -sT "${from_path}" "${to_path}"
  echo Mounted $(basename "${from_path}")
done

# compare the value as a string instead of executing it as a command
if [ "${PRELOAD}" == "true" ]; then
  set -Eeuo pipefail
  python3 -u scripts/preload_models.py --skip-sd-weights --root ${ROOT} --config_file /docker/models.yaml
fi

exec "$@"

23

services/invoke/models.yaml

Normal file

@@ -0,0 +1,23 @@

# This file describes the alternative machine learning models
# available to InvokeAI script.
#
# To add a new model, follow the examples below. Each
# model requires a model config file, a weights file,
# and the width and height of the images it
# was trained on.
stable-diffusion-1.5:
  description: Stable Diffusion version 1.5
  weights: /data/StableDiffusion/v1-5-pruned-emaonly.ckpt
  vae: /data/VAE/vae-ft-mse-840000-ema-pruned.ckpt
  config: ./configs/stable-diffusion/v1-inference.yaml
  width: 512
  height: 512
  default: true
inpainting-1.5:
  description: RunwayML SD 1.5 model optimized for inpainting
  weights: /data/StableDiffusion/sd-v1-5-inpainting.ckpt
  vae: /data/VAE/vae-ft-mse-840000-ema-pruned.ckpt
  config: ./configs/stable-diffusion/v1-inpainting-inference.yaml
  width: 512
  height: 512
  default: false

46

services/sygil/Dockerfile

Normal file

@@ -0,0 +1,46 @@

# syntax=docker/dockerfile:1

FROM python:3.8-slim

SHELL ["/bin/bash", "-ceuxo", "pipefail"]

ENV DEBIAN_FRONTEND=noninteractive PIP_PREFER_BINARY=1

RUN --mount=type=cache,target=/root/.cache/pip pip install torch==1.13.0 torchvision torchaudio --extra-index-url https://download.pytorch.org/whl/cu117

RUN apt-get update && apt install gcc libsndfile1 ffmpeg build-essential zip unzip git -y && apt-get clean

RUN --mount=type=cache,target=/root/.cache/pip <<EOF
git config --global http.postBuffer 1048576000
git clone https://github.com/Sygil-Dev/sygil-webui.git stable-diffusion
cd stable-diffusion
git reset --hard 5291437085bddd16d752f811b6552419a2044d12
pip install -r requirements.txt
EOF


ARG BRANCH=master SHA=571fb897edd58b714bb385dfaa1ad59aecef8bc7
RUN --mount=type=cache,target=/root/.cache/pip <<EOF
cd stable-diffusion
git fetch
git checkout ${BRANCH}
git reset --hard ${SHA}
pip install -r requirements.txt
EOF

RUN --mount=type=cache,target=/root/.cache/pip pip install transformers==4.24.0

# add info
COPY . /docker/
RUN <<EOF
python /docker/info.py /stable-diffusion/frontend/frontend.py
chmod +x /docker/mount.sh /docker/run.sh
# streamlit
sed -i -- 's/8501/7860/g' /stable-diffusion/.streamlit/config.toml
EOF

WORKDIR /stable-diffusion
ENV PYTHONPATH="${PYTHONPATH}:${PWD}" STREAMLIT_SERVER_HEADLESS=true USE_STREAMLIT=0 CLI_ARGS=""
EXPOSE 7860

CMD /docker/mount.sh && /docker/run.sh

32

services/sygil/mount.sh

Executable file

@@ -0,0 +1,32 @@

#!/bin/bash

set -Eeuo pipefail

declare -A MOUNTS

ROOT=/stable-diffusion/src

# cache
MOUNTS["/root/.cache"]=/data/.cache
# ui specific
MOUNTS["${PWD}/models/realesrgan"]=/data/RealESRGAN
MOUNTS["${PWD}/models/ldsr"]=/data/LDSR
MOUNTS["${PWD}/models/custom"]=/data/StableDiffusion

# hack
MOUNTS["${PWD}/models/gfpgan/GFPGANv1.3.pth"]=/data/GFPGAN/GFPGANv1.4.pth
MOUNTS["${PWD}/models/gfpgan/GFPGANv1.4.pth"]=/data/GFPGAN/GFPGANv1.4.pth
MOUNTS["${PWD}/gfpgan/weights"]=/data/.cache


for to_path in "${!MOUNTS[@]}"; do
  set -Eeuo pipefail
  from_path="${MOUNTS[${to_path}]}"
  rm -rf "${to_path}"
  mkdir -p "$(dirname "${to_path}")"
  ln -sT "${from_path}" "${to_path}"
  echo Mounted $(basename "${from_path}")
done

# streamlit config
ln -sf /docker/userconfig_streamlit.yaml /stable-diffusion/configs/webui/userconfig_streamlit.yaml
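The loop in `mount.sh` does not mount anything in the kernel sense. For every entry it deletes whatever the image ships at the target path, makes sure the parent directory exists, and replaces the target with a symlink into `/data`. A rough Python equivalent of one iteration is sketched below. The `mount` helper and the temporary paths are hypothetical, and the demo assumes a platform where unprivileged symlinks are allowed.

```python
import shutil
import tempfile
from pathlib import Path


def mount(to_path: Path, from_path: Path) -> None:
    """Replace `to_path` with a symlink pointing at `from_path`."""
    # rm -rf "${to_path}": test for a symlink first, since is_dir() follows links
    if to_path.is_symlink() or to_path.is_file():
        to_path.unlink()
    elif to_path.is_dir():
        shutil.rmtree(to_path)
    # mkdir -p "$(dirname "${to_path}")"
    to_path.parent.mkdir(parents=True, exist_ok=True)
    # ln -sT "${from_path}" "${to_path}"
    to_path.symlink_to(from_path)


# Demo inside a temporary directory instead of the real /data and app paths.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    data = root / "data" / "RealESRGAN"
    data.mkdir(parents=True)
    (data / "weights.pth").write_text("placeholder")

    target = root / "app" / "models" / "realesrgan"
    target.mkdir(parents=True)  # directory that already exists in the image
    mount(target, data)

    is_link = target.is_symlink()
    visible = (target / "weights.pth").read_text()

print(is_link, visible)
```

Because the target is removed first, anything the image placed at that path is discarded. That is presumably why the AUTOMATIC1111 entrypoint copies user scripts into the `scripts` directory instead of linking it.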

10

services/sygil/run.sh

Executable file

@@ -0,0 +1,10 @@

#!/bin/bash

set -Eeuo pipefail

echo "USE_STREAMLIT = ${USE_STREAMLIT}"
if [ "${USE_STREAMLIT}" == "1" ]; then
  python -u -m streamlit run scripts/webui_streamlit.py
else
  python3 -u scripts/webui.py --outdir /output --ckpt /data/StableDiffusion/v1-5-pruned-emaonly.ckpt ${CLI_ARGS}
fi

11

services/sygil/userconfig_streamlit.yaml

Normal file

@@ -0,0 +1,11 @@

# https://github.com/Sygil-Dev/sygil-webui/blob/master/configs/webui/webui_streamlit.yaml
general:
  version: 1.24.6
  outdir: /output
  default_model: "Stable Diffusion v1.5"
  default_model_path: /data/StableDiffusion/v1-5-pruned-emaonly.ckpt
  outdir_txt2img: /output/txt2img
  outdir_img2img: /output/img2img
  outdir_img2txt: /output/img2txt
  optimized: True
  optimized_turbo: True
